Text Posts

Though HTTPS has been an option for my site for a little while now, I haven't enforced it outside of various commerce related pages (e.g. the shopping cart). Starting now, not only is HTTPS required to browse my site, I've enabled the HSTS header to ensure that unencrypted connections are never allowed.

What is HSTS?

HSTS stands for "HTTP Strict Transport Security". This does a couple of things for the modern browsers that follow this header--

- Any links on my website that point elsewhere on this same domain will automatically be changed to HTTPS. I have used protocol relative URLs or CMS-generated URLs most everywhere, but this should ensure that anything I've missed will stay encrypted.

- For the defined period of time (I've chosen a year), if you connect to my site and there are any HTTPS related issues such as an expired certificate or invalid certificate, your browser should not even allow you to bypass the warning message.

Why HSTS?

Of course, given that you will be denied access to my website if I accidentally misconfigure something related to SSL or even if my CA's certificate has problems, why would I take the risk of downtime and lost traffic in the same of encryption?

My website is primarily a blog-- it does allow you to purchase various tutorials I've written and apps I have developed, but the vast majority of my visitors land on a content page with no sensitive information. Despite this, I believe that each user is entitled to both privacy, as well as security from ISPs and establishments that perform bad practices such as ad injection. With enforced HTTPS, your hotel or coffee shop will no longer be able to see what content you are specifically looking at1, and will not be able to inject their own ads or tracking software into my website.

- Of course, without encrypted DNS, an attacker or privileged user will still know you are accessing my domain ↩

PHP is an interesting language, and to many it is considered a language that is archaic and badly designed. In fact, I largely agree that PHP's design is not optimal, but there is no other language in the world that is both easy to learn and deployable on almost any shared hosting service so easily. This is changing, but for now, PHP is here to stay.

By design, PHP does not have explicit typing-- a variable can be any type, and can change to any type at any time. This is in stark contrast to other languages, such as Apple's Swift, Java, and many others. Depending on your background, you may consider PHP's lack of explicit typing to be dangerous.

Not only this, but PHP is not the most performant language by any means. You can see this for yourself in TechEmpower's famous framework benchmarks. These results clearly show that PHP is at or near the bottom of the pile, being beat outright by languages such as Java and Go.

So, how do you make one of the most popular languages in the world for web applications usable again? Many say that PHP simply needs to be killed off entirely, but Facebook disagrees.

HHVM is a project designed to revitalize PHP. HHVM often beats PHP in performance benchmarks, and supports a new explicitly typed language-- Hack. Hack, sometimes referred to as "Hacklang" so that it you can actually search for it on Google, is almost completely compatible with standard PHP. With the exception of a few quarks, any PHP file can also be a valid Hack file. From there, you can take advantage of new features such as explicit typing, collections, and generics. For example:

<?hh

class MyClass {

public function add(int $one, int $two): int {

return $one + $two;

}

}

As you can see, Hack is very similar to PHP. In fact, Hack is really just an extension of PHP since you can simply begin any PHP code with the <? hh tag to make it a valid Hack file.

So, how do you get started with HHVM and Hack? Unfortunately, Mac OS X and Windows binaries are not provided officially, and though you can install HHVM yourself by compiling it on Mac, it's certainly not the most convenient. An even better way of trying out Hack is to simply use a Linux server. One of my go-to providers for cheap "testing" servers on demand is DigitalOcean, who provides SSD cloud servers starting at $0.007 an hour. Of course, this tutorial applies to any server provider or even a local VM, so you can follow the steps regardless who your provider is.

Booting Up a Server

First, you'll need an Ubuntu server-- preferably 14.04, though any version from 12.04 up will work fine, as does many other flavors of Linux.

On DigitalOcean, you can get started by registering a new account or using your existing one. If you're a new user to DigitalOcean, you may even be able to find a coupon code for $5-$10 in credit (such as the coupon code ALLSSD10, which should be working as of July 2014).

Once you've registered on DigitalOcean, you can launch a new "Droplet" (DigitalOcean's term for a virtual machine or VPS) with the big green "Create" button on the left side of your dashboard.

Go ahead and enter any hostname you want, and choose a server size. You can also choose any Droplet size you wish, including the baseline 512 MB RAM Droplet. If you're planning on running anything in production on this server or wish to have a little more headroom, you may wish to choose the slightly larger 1 GB RAM Droplet.

Next, you can choose the region closest to yourself (or your visitors if you're using this as a production server). DigitalOcean has six different data centers at the moment, including New York, San Francisco, Singapore, and Amsterdam. Different data centers have different features such as private networking and IPv61, though these features are slated to roll out to all data centers at some point in time.

Finally, choose the Ubuntu 14.04 image and create your Droplet. It'll only take around 60 seconds to do so, and once the Droplet is running SSH into the server using the credentials sent to you or your SSH key if you've set up SSH authentication.

Installing HHVM

HHVM is relatively easy to install on Ubuntu, but varies based on your Ubuntu version. The main difference between the commands below is simply the version name when adding the repository to your sources.

Ubuntu 14.04

wget -O - http://dl.hhvm.com/conf/hhvm.gpg.key | sudo apt-key add -

echo deb http://dl.hhvm.com/ubuntu trusty main | sudo tee /etc/apt/sources.list.d/hhvm.list

sudo apt-get update

sudo apt-get install hhvm

Ubuntu 13.10

wget -O - http://dl.hhvm.com/conf/hhvm.gpg.key | sudo apt-key add -

echo deb http://dl.hhvm.com/ubuntu saucy main | sudo tee /etc/apt/sources.list.d/hhvm.list

sudo apt-get update

sudo apt-get install hhvm

Ubuntu 13.04

Ubuntu 13.04 isn't officially supported or recommended to use.

Ubuntu 12.04

sudo add-apt-repository ppa:mapnik/boost

wget -O - http://dl.hhvm.com/conf/hhvm.gpg.key | sudo apt-key add -

echo deb http://dl.hhvm.com/ubuntu precise main | sudo tee /etc/apt/sources.list.d/hhvm.list

sudo apt-get update

sudo apt-get install hhvm

If you've having issues with the add-apt-repository command on Ubuntu 12.04, then you may need to run sudo apt-get install python-software-properties.

Running HHVM

Once you've installed HHVM, you can run it on the command line as hhvm. Once you create a new Hack file with the following contents, try and run it with hhvm [filename.

<?hh

echo "Hello from HHVM " . HHVM_VERSION;

Note the lack of a closing tag-- in Hack, there are no closing tags and HTML is not allowed inline.

Installing and Setting Up Nginx

Of course, installing HHVM for the command line is the easy part. To actually serve traffic to HHVM using Nginx, you have to set HHVM up as a fast-cgi module. To do so, first install Nginx with sudo apt-get install nginx and start it with sudo service nginx start. To verify that Nginx installed correctly, visit your Droplet's IP address and you should see the Nginx default page.

Now, we can remove the default Nginx websites with the following commands:

sudo rm -f /etc/nginx/sites-available/*

sudo rm -f /etc/nginx/sites-enabled/*

Then, create a new configuration file for your website as /etc/nginx/sites-available/hhvm-site. You can change the name of the configuration file if you wish. The contents of the file should be similar to the one of following:

Emulating "mod_rewrite"

The Nginx equivalent of sending all requests to a single index.php file is as follows. Every request to this server will be sent to the index.php file, which is perfect for frameworks such as Laravel.

server {

# Running port

listen 80;

server_name www.example.com;

# Root directory

root /var/www;

index index.php;

location / {

try_files $uri @handler;

}

location @handler {

rewrite / /index.php;

}

location ~ \.php$ {

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

}

Traditional Setup

In this example, any requests to a script ending in .php will be executed by HHVM. For example, if you have hello.php in your web root, navigating to http://www.example.com/hello.php would cause the hello.php file to be executed by HHVM.

server {

# Running port

listen 80;

server_name www.example.com;

# Root directory

root /var/www;

index index.php;

location ~ \.php$ {

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

}

Also, ensure that you change all instances of the web root (/var/www) in the above configuration files to your own web root location, as well as the server_name. Alternatively, you can leave the web root as /var/www and just put your Hack files in that folder.

Now that you've created the file under sites-available, you can symlink it to the sites-enabled folder to enable it in Nginx.

sudo ln -s /etc/sites-available/hhvm-site /etc/sites-enabled/hhvm-site

Before you restart Nginx to apply the changes, start the HHVM fast-cgi enabled server with hhvm --mode daemon -vServer.Type=fastcgi -vServer.Port=9000. After the HHVM daemon is started, you can then run sudo service nginx restart to apply your Nginx configuration changes. If you have a Hack file in your web root, you should be able to visit your Droplet's IP and see the response.

Starting HHVM on Boot

HHVM currently does not automatically start up when your server is restarted. To change this, you can simply add the line below into the file named /etc/rc.local to run it on boot:

/usr/bin/hhvm --mode daemon -vServer.Type=fastcgi -vServer.Port=9000

HHVM should now start when your server boots up.

You should now have HHVM and Hack up and running on your server-- make sure you take a look at Hack's documentation for more information on the features of the language.

- Singapore is the first region with IPv6 support, while New York 2, Amsterdam 2, and Singapore have private networking. ↩

Over the course of two days in a relatively quiet area of south Seattle, one of the biggest companies in technology took over a quiet building called Sodo Park.

The space, a small, old looking building, is commonly used for events such as weddings, holiday parties, and other corporate gatherings. From the outside, it wasn't apparent anything was occurring at all-- only a few lone parking signs across the street gave any hint of the company's presence. But as you walked to the front door, flanked by a couple employees in nondescript black T-Shirts, it was apparent that this was more than just a "corporate event."

Stepping inside revealed a large, open space filled with mingling event staff and visitors. After I entered through the door, I was herded to a table at which I was greeted by smiling Google employees sitting behind their Chromebook Pixel laptops. After I filled out a media release form and checked in my jacket, I walked into a short queue to be introduced to the functionality of Glass. Behind me were several clear cases containing prototypes of the technology-- smartphones affixed to glasses frames seemed to be a common theme.

At this point, I had a chance to look around the room. Though I had missed it earlier, employees were walking around the room with Glass on. No matter where you were in the room, you were being watched by tiny cameras mounted on each staff member's head.

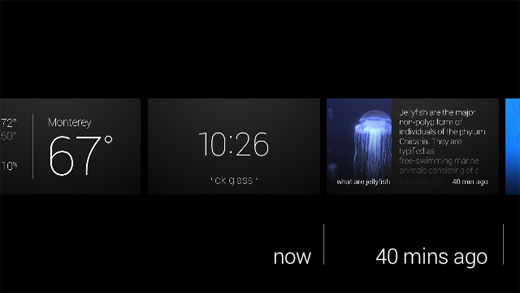

Several minutes later, a friendly woman came over with a Android tablet to welcome the individuals in the queue to the "Seattle Through Glass" event, and gave a quick demonstration of the gestures. The tablet, which was paired to the glasses, displayed a mirrored version of the Glass interface-- everything she saw, we were able to see as well.

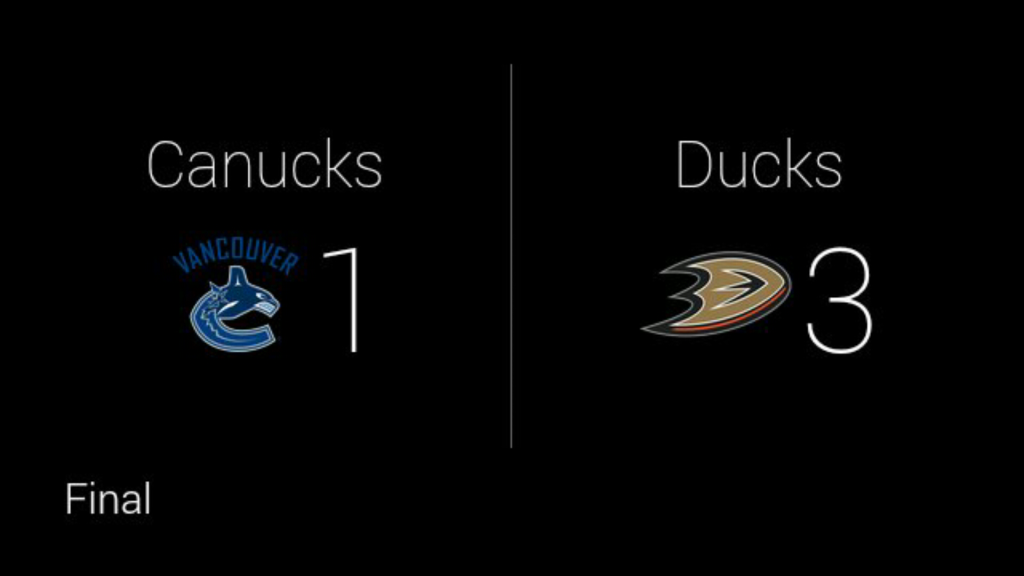

At first, she pulled up sports scores for the Mariners baseball game using the voice interface and showed simple features such as the Timeline. Near the end of the demo, in what seemed to be a shock her audience, the spoke a command-- "Ok Glass, Take a Picture." Immediately, the photo popped up on her Glass display, and in turn was mirrored onto the tablet for us to see. Several individuals were taken aback, surprised by the lack of time to get ready for the photo.

We were then ushered over to a dark corner of the room, and were all provided with white pairs of Google Glass for ourselves to try. After putting the glasses on and adjusting them slightly, I tapped the touch-sensitive panel on the right side of my head and a floating ghost of a display appeared in the corner of my vision.

At first, I was slightly confused-- in all my past experiences, I've never had to think about how to focus on something. After I looked up and to the right, however, the display became clear and in focus.

"1:21 PM"

"ok glass"

After the device was woken up with a tap, there were only two pieces of information displayed-- the first being the current time. Just below the thin, white timestamp was simply the words "ok glass" in quotation marks.

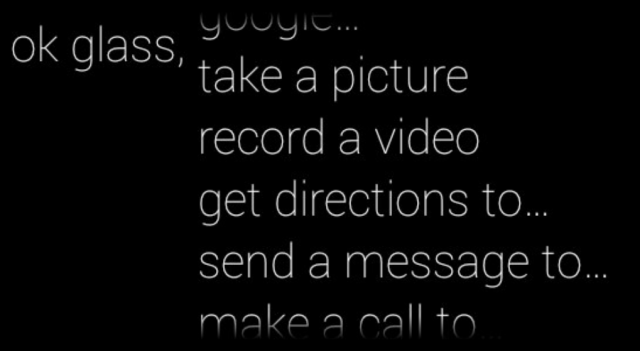

"Ok, Glass," I said.

When you pick up a Pebble smart watch, it immediately has a sense of purpose. Similarly, a Nest has a place and function on your wall-- you know what to do with it. Though modern smart devices have capabilities beyond their traditional counterparts, they always have a sense of purpose-- even it that is to simply display the time.

But with Google Glass, I paused. I didn't know what to do. On my face sat a $1,500 set of computerized glasses-- connected to the internet and one of the largest knowledge engines in the world, none the less, and I couldn't summon up a simple query. I had been overcome with a feeling of blankness-- there wasn't an obvious use for Google Glass, in my mind.

I quickly swiped down on the frame, a "back" gesture that powered the display off again.

Once again, I said, "Ok Glass." But this time, I managed to eek out a simple--if forced--question: "Google, what is the height of the Space Needle?"

The device, with its relatively mechanical voice, returned the answer-- 605 feet.

At that point, Glass felt familiar: the voice was the same used in Google Now, as well as Google's other voice-enabled products. The concept of speaking to your glasses was still alien to me, yet the familiarity of Google Glass's response made it seem like another extension of myself in the same way as my phone always had been.

I tried another query-- "Ok Glass, Google, how far is it from Seattle to San Diego?"

This time, instead of the "Knowledge Graph" card displayed in response to my last query, the glasses popped up with a Google Maps card-- showing walking directions from Seattle to San Diego. While it answered my question (it takes some 412 hours across 1,200 miles, in case you're wondering), the exact response wasn't quite what I was looking for.

I tried taking several photos and sharing them on Google+--a process that was relatively streamlined given the lack of a traditional interface--as well as swiping through past "Cards" that previous demo-ers had summoned in the hours before I arrived. The timeline was filled with several different queries and apps, one of which was CNN. Curious, I tapped on the frame as a news story about Malaysia Air Flight 370 was on screen, and the still photo was brought into motion.

This, admittedly, was one of the demonstrations that awestruck me the most. I felt like some sort of cyborg, able to watch breaking news stories on a virtual screen floating in front of my face. The sound was muddied, and though audible, not high quality. While it was passable in the exhibition room, even with the various conversations going on around me, I am not convinced it would have been loud enough to hear over the noise at a bus or train station.

Having played with the CNN story enough, I once again rattled my brain to think of features to try. Eventually, I settled on simply looking up a historical event. I was brought to a minimalistic list of web search results, though I didn't anticipate I would be able to do much with them.

To my surprise, tapping on a result brought up the mobile Wikipedia page in a full web browser. Sliding my fingers around on the frame manipulated the page. Zooming and panning around was relatively natural feeling, though I could not figure out how to click on links.

With the basics of Glass under my belt, I proceeded to the opposite side of the room-- a slightly brighter, more lively corner decorated with guitars and stereo sets. Along with the acoustic equipment was another table-- this time, with several sets of black Google Glass.

A similar routine to that at the first demonstration area ensued, though with one difference-- the Google staff member pulled out a set of odd looking headphones from out of sight, and plugged them into the micro-USB port on the glasses.

With this newest pair of Google Glass once again on my face, I woke it up and asked it to play "Imagine Dragons." Hooked up to Google Play Music All Access, I was able to command the device to play any song I could imagine-- all with my voice.

There are several inherent flaws with listening to music on Glass, however. First, because there is no 3.5mm headphone jack, there is an unfortunate lack of quality headphones. I own a pair of Klipsch x10 earbuds-- certainly not a set of custom in ear monitors that cost half a grand--but leaps and bounds better than the headphones that are included with your phone or iPod.

The earbuds I was given at the event were specifically designed for use with Glass. Not only because of the micro-USB connector, but the length of one earbud was shorter than the other. This was necessary because the distance from the micro-USB port to your right ear is only several inches, whereas the cable leading to your left ear is significantly longer. Normal headphone cords would simply dangle around your right ear.

Like Apple's EarPods, they had a funny shape designed to project sound into your ear. Also like Apple's headphones, to my dismay, the sound quality was relatively mediocre. It was a step up from the bone conduction speaker that's embedded into the glasses frames, but it's not an impressive feat admittedly.

If you listen to major artists, whether it be Imagine Dragons, Kanye West, or Lady Gaga, you'd have no issues with Google Glass. However, some obscure artists would sometimes fail to be recognized by the voice recognition. For example, it took four or five tries for my Glass to recognize "listen to Sir Sly." Instead of playing the desired artist, Glass would misunderstand me and often attempt to look up an artist named "Siri fly."

As I stood there attempting to enunciate the word "sir" to the best of my ability, it was clear that the technology was fair from ready. It's awkward enough to dictate your music choices out loud, but it's even worse if you have to do it repeatedly. Given the number of odd looks I received from those at the event, imagine the reaction of the people around you if you were riding a bus or train.

Eventually, my frustration overcame my initial awe, and I moved to the final corner of the room.

When I walked in I had noticed this particular setup, though without a clue what it was for. There were several boxes, varying in size, with signs on them in some foreign language-- some artistic exhibit, I imagined. But as I made my trek through the swarms of Google employees swiping their temples on their own set of Google Glass, I realized what the subject of the next demonstration was.

The final booth had the most colorful Google Glass frames of all: a bright, traffic cone-orange. Perhaps it was indicative of the exciting demonstration that was to follow.

With the glasses on, the Google employee instructed me to utter a single voice command:

"Ok Glass, translate this."

Instantly, an app launched on the screen with a viewfinder similar to that of a camera. Essentially, it appeared like Glass provided a picture-in-picture experience. I walked over to an inconspicuous, white board.

"il futuro è qui," read the sign.

In an instant, where the Italian once was, Glass replaced it with the words, "The future is here." No kidding.

The concept of in-place translation is not new. In fact, it's existed for several years on other platforms, such as the Word Lens app on iPhone. The impressive part of the demo wasn't the fact that the translation could be done in place, but rather the fact that the it was the glasses I was wearing doing the translation, and it was projecting the text onto a prism that seemingly hovered in front of me.

I wandered around the demonstration area and looked at each sign, thinking about how useful the technology would have been on my recent trip to Thailand.

After several more minutes, I made my way over to the back of the room where three inconspicuous looking wooden columns had been labeled "Photo Booth." Alongside the columns was another set of tables with two racks of Google Glass: one with lenses, one without, and in four color choices.

After posing for the camera, the friendly Google employee manning the booth printed the photo out and handed it to me.

Having visited all three of the themed demo stations, I collected my belongings, received a poster, and headed back into the Seattle cold. Without Google Glass, I felt oddly primal holding only my cell phone-- having just witnessed one of the more impressive technological demonstrations of the last few years, a handheld device no longer felt adequate. I wanted more than just the ability to retrieve information-- I wanted to summon it.

Glass is an impressive device, though it would be wrong to call it a product. The hardware has the polish-- it's sturdy and lighter than I anticipated-- though it lacks in sex appeal. Glass, to be blunt, looks like a device that belongs in a science fiction movie, not something you'd expect someone to be walking around with in downtown Seattle.

The voice interface is your primary method of input, yet it lacks the accuracy of your fingers. You may find yourself repeating commands often, and if you don't know the pronunciation of a restaurant or venue, you're out of luck entirely. And even if the voice commands do work correctly, you'll likely look around and catch a brief glimpse of the cold glare from strangers sitting next to you. Voice commands may be ideal when you need a hands-free way to convert cups to fluid ounces in your kitchen, but not to check the latest sports scores while you're riding the bus home.

Google has a winner on their hands-- maybe not in its current form, but Glass represents a category of devices that will flood the market in the next several years. As a society, we're always looking for an easier and more intuitive way to consume information, and wearable electronics let us do just that in an inconspicuous manner.

When Glass is launched to the public later this year, we can only hope the current $1500 asking price is lowered dramatically. Especially with the high mental barrier of entry and the "nerd" stereotype emanated by Glass, Google needs to hit a price point of $200 or less to reach beyond their core audience of technophiles.

Even if Glass is only adopted by enthusiasts, this is not necessarily a bad omen, nor does it spell the end of the product. Rather, it should be taken as a sign that Glass is still not quite ready for the general public-- either stylistically or economically.

Google isn't primarily in the hardware business and its livlihood doesn't depend on Glass. They have the freedom and resources to turn the glasses, or wearable electronics in general, into a mainstream product. After all, imagine what sort of data they could glean from the public if every man, woman, and child in the world had an additional few sensors on their body.

I, for one, look forward to a future in which every device I own is networked-- the Internet of Things pushed to the extreme, and Google's "Seattle Through Glass" event only made me even more excited.

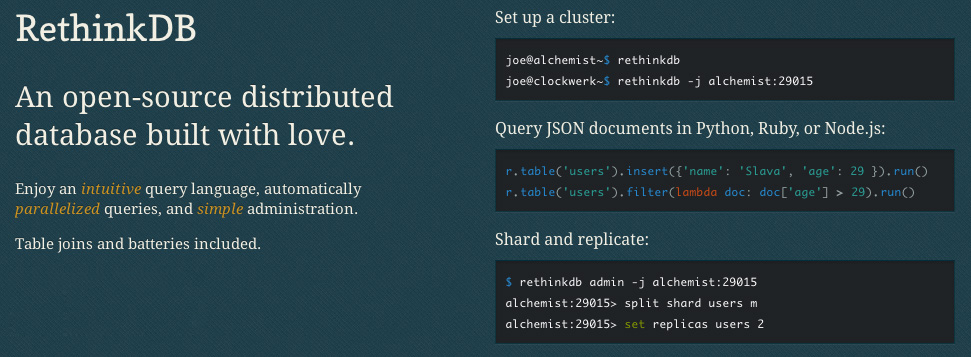

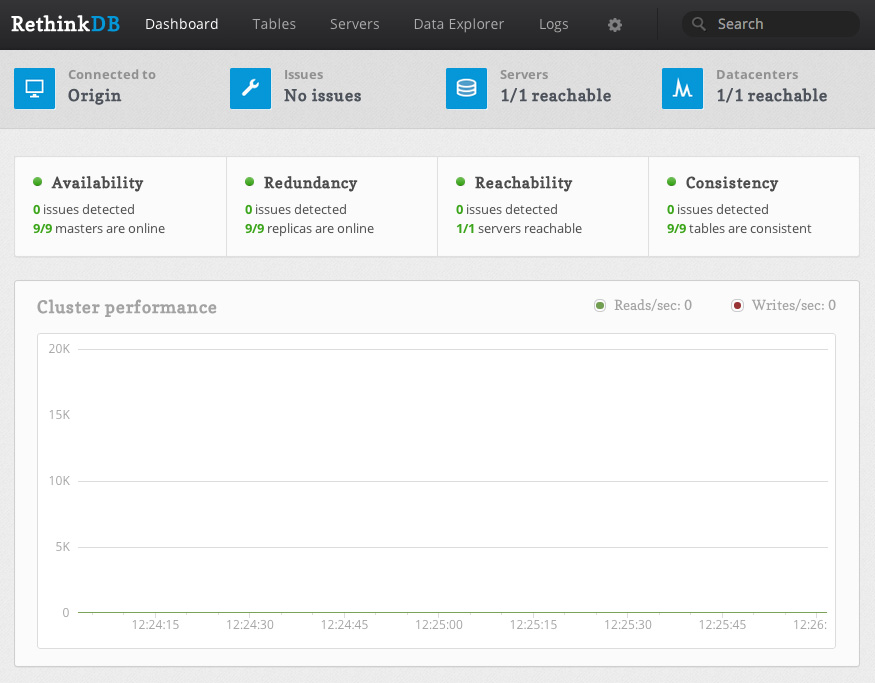

RethinkDB is a distributed document-store database that is focused on easy of administration and clustering. RethinkDB also features functionality such as map-reduce, sharding, multi-datacenter functionality, and distributed queries. Though the database is relatively new, it has been funded and is moving quickly to add new features and a Long Term Support release.

One major issue still remains with RethinkDB, however-- it's relatively difficult to secure properly unless you have security group or virtual network functionality from your hosting provider (a la Amazon Web Services Virtual Private Cloud, security groups, etc.). For example, RethinkDB's web administration interface is completely unsecured when exposed to the public Internet, and the clustering port does not have any authentication mechanisms. Essentially, this means that if you have an exposed installation of RethinkDB, anyone can join your database cluster and run arbitrary queries.

DigitalOcean, a great startup VPS provider, is a cheap means of trying out RethinkDB for yourself. The one issue is, they currently do not provide any easy way of securing clusters of RethinkDB instances. Unlike Amazon's security groups, which allow you to restrict traffic between specific instances, every DigitalOcean VPS can talk to each other over the private network1. Essentially, this would allow any DigitalOcean VPS in the data center to attach itself to your RethinkDB cluster, which is less than ideal.

Because of this, DigitalOcean is not a great host to run a cluster on if you're looking to get up and running quickly. There are ways around this, such as running a VPN (especially a mesh VPN like tinc) or manually adding each RethinkDB's IP address to your iptables rules, but this is a much more complicated setup than using another host that has proper security groups.

However, this doesn't mean that DigitalOcean is a bad host for your RethinkDB database-- especially if you're looking to try out the database or if you're just running a single node (which is fine for many different applications). In this tutorial, we'll go over how to properly setup a RethinkDB node and configure iptables to secure access to the database and web administration interface on DigitalOcean specifically, however this tutorial applies to any VPS or Dedicated Server provider.

Launching a Droplet

The first step you want to take is to sign up for DigitalOcean. If you sign up from this link, you will receive $10 in credit for free. This is enough to run a 512 MB droplet for two months, or a 1 GB RAM droplet for a single month2.

After registering, log into your account and create a new droplet3 on the dashboard. Enter a hostname, choose an instance size4, select the region closest to you5 for the lowest latency, and choose an operating system. For now, "Ubuntu 13.10 x64" or "Ubuntu 13.04 x64" are good choices unless you have another preference. If you wish to use an SSH key for authentication (which is highly recommended), select which key you'd like preinstalled on your Droplet. After you've selected all of the options you'd like to use, click the large "Create Droplet" button at the bottom of the screen.

Installing RethinkDB

Once your instance is launched, you're taken to a screen containing your server's IP address. Go ahead and SSH into it with either the root password emailed to you or with your SSH key if you've selected that option. You should be taken to the console for your freshly launched Ubuntu instance.

To actually install RethinkDB, you'll need to add the RethinkDB Personal Package Archive (PPA) with the command sudo add-apt-repository ppa:rethinkdb/ppa6.

Next, update your apt sources with sudo apt-get update, and then install the RethinkDB package with sudo apt-get install rethinkdb.

Configuring RethinkDB

As of now, you could run the command rethinkdb, and RethinkDB would start up and create a data file in your current directory. The problem is, RethinkDB does not startup on boot by default and is not configured properly for long term use.

To configure RethinkDB, we'll use a configuration file that tells RethinkDB how to run the database. Go ahead and copy the sample configuration into the correct directory, and then edit it:

sudo cp /etc/rethinkdb/default.conf.sample /etc/rethinkdb/instances.d/instance1.conf

sudo nano /etc/rethinkdb/instances.d/instance1.conf

Note that there are two commands above-- if there is a line break inside of the first command, ensure you copy and paste (or type out) the whole thing. This will open up the "nano" editor, though you can substitute this with any other editor you have installed on your VPS.

RethinkDB Configuration Options

The sample configuration file, as of RethinkDB v0.11.3, is included below for reference:

#

# RethinkDB instance configuration sample

#

# - Give this file the extension .conf and put it in /etc/rethinkdb/instances.d in order to enable it.

# - See http://www.rethinkdb.com/docs/guides/startup/ for the complete documentation

# - Uncomment an option to change its value.

#

###############################

RethinkDB configuration

###############################

Process options

User and group used to run rethinkdb

Command line default: do not change user or group

Init script default: rethinkdb user and group

runuser=rethinkdb

rungroup=rethinkdb

Stash the pid in this file when the process is running

Command line default: none

Init script default: /var/run/rethinkdb//pid_file (where is the name of this config file without the extension)

pid-file=/var/run/rethinkdb/rethinkdb.pid

File path options

Directory to store data and metadata

Command line default: ./rethinkdb_data

Init script default: /var/lib/rethinkdb// (where is the name of this file without the extension)

directory=/var/lib/rethinkdb/default

Log file options

Default: /log_file

log-file=/var/log/rethinkdb

Network options

Address of local interfaces to listen on when accepting connections

May be 'all' or an IP address, loopback addresses are enabled by default

Default: all local addresses

bind=127.0.0.1

The port for rethinkdb protocol for client drivers

Default: 28015 + port-offset

driver-port=28015

The port for receiving connections from other nodes

Default: 29015 + port-offset

cluster-port=29015

The host:port of a node that rethinkdb will connect to

This option can be specified multiple times.

Default: none

join=example.com:29015

All ports used locally will have this value added

Default: 0

port-offset=0

Web options

Port for the http admin console

Default: 8080 + port-offset

http-port=8080

CPU options

The number of cores to use

Default: total number of cores of the CPU

cores=2

bind

There are a couple of important entries we need to look at. First of all, is the bind address. By default, RethinkDB will only bind on the local IP address 127.0.0.1. This means that nothing outside of the machine the RethinkDB server is running on can access the data, join the cluster, or see the web admin UI. This is useful for testing, but in a production environment where the database is running on a different physical server than the application code, we'll need to change this.

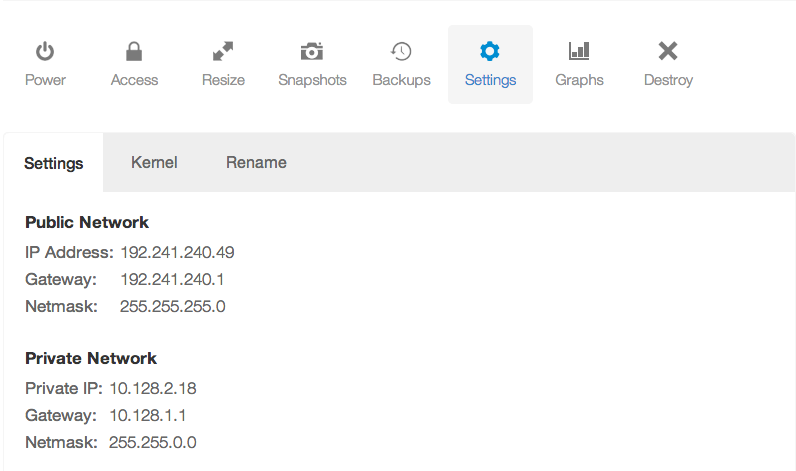

If you've launched an instance in a data center than supports private networking, you can change the bind option to your private IP address7 to start with. For example, if my private IP address is 10.128.2.18, you could use that value for the bind option. Also, make sure you remove the leading hash "#" symbol. This will uncomment the line and make the configuration active. If you want your database to be accessible to the public Internet, you may use your public IP address. Note that there are security ramifications of exposing your RethinkDB instance to the Internet, though we'll address them a little later.

If you wish to bind to all IP addresses-- including public IP addresses--you can use 0.0.0.0.

driver-port, cluster-port

The driver and cluster port options generally should not be modified unless you have a reason to do so. Modifying the ports just so that someone may not "guess" which ports you're using for the RethinkDB instance is not secure-- always assume that someone will find which ports you've configured, and secure your machine appropriately.

http-port

This option configures which port the HTTP administration UI will be accessible on. As with the driver-port and cluster-port, you can change this if the port is already in use by another service.

However, note that the admin UI is not secured in any way. Anyone with access to the admin panel can edit and delete machines from your cluster, and create, edit, and delete database tables and records. However, the admin UI will only be available on the bind address you've configured, so if you've left your bind address as 127.0.0.1, you will only be able to access the admin UI directly from the machine running RethinkDB.

join

The join address will not be used in this lesson, though this option configures which hostname or IP address and port your RethinkDB instance will attempt to join to form a cluster.

Once you've configured all of the options appropriately, you can save the configuration file and start the RethinkDB service:

sudo /etc/init.d/rethinkdb restart

Securing RethinkDB

Now you have RethinkDB running on your server, but it is completely unsecured if your bind address is anything but 127.0.0.1 or another non-accessible IP address. We need to do a couple of things:

- Restrict access to the cluster port so that no other machines can connect to the cluster

- Restrict access to the HTTP admin web UI so that malicious parties cannot access it

- Secure the client driver port so that RethinkDB requires an authentication key, and optionally restrict the port to only allow a specific set of IP addresses to connect

Using iptables to Deny Access to Ports

One method of restricting access to a specific port is through the use of iptables. To block traffic to a specific port, we can use the command:

iptables -A INPUT -p tcp --destination-port $PORT -j DROP

Simply change $PORT to the specific port you'd like to drop traffic for. For example to deny access to the cluster port (since we're not building a cluster of RethinkDB instances), we can use the command:

iptables -A INPUT -p tcp --destination-port 29015 -j DROP

This is assuming that you have not changed the default cluster communication port of 29015. Simply modify the above command to read the same as the "cluster-port" configuration entry if necessary.

Now, we'd also like to deny all traffic to the web administration interface that's located on port 8080. We can do this in a similar manner:

iptables -A INPUT -p tcp --destination-port 8080 -j DROP

However, this command denies access to the web administration UI for everyone-- including yourself. There are three primary ways we can allow you to access the web UI, from most secure to least secure--

- Use an SSH tunnel to access the interface

- Use a reverse proxy to add a username and password prompt to access the interface

- Drop traffic on port 8080 for all IP addresses except your own

Accessing the web administration UI through a SSH tunnel

To access the web administration UI through an SSH tunnel, you can use the following set of commands.

First, we must make the administration UI accessible on localhost. Because we dropped all traffic to the port 8080, we want to ensure that traffic from the local machine is allowed to port 8080.

sudo iptables -I INPUT -s 127.0.0.1 -p tcp --dport 8080 -j ACCEPT

The above command does one thing-- it inserts a rule, before the DROP everything rule, to always accept traffic to port 8080 from the source 127.0.0.1-- the local machine. This will allow us to tunnel into the machine and access the web interface.

Next, we need to actually setup the tunnel on your local machine. This should not be typed into your VPS console, but in a separate terminal window on your laptop or desktop.

ssh -L $LOCALPORT:localhost:$HTTPPORT $IPADDRESS

Replace the $LOCALPORT variable with a free port on your local machine, $HTTPPORT with the port of the administration interface, and $IPADDRESS with your VPS IP address. Additionally, if you SSH into your VPS with another username (e.g. root), you may append "$USERNAME@" before the IP address, replacing $USERNAME with the username you use to authenticate.

Once you've run the above commands, then you should be able to visit localhost:$LOCALPORT in your local web browser and see the RethinkDB web interface.

For a complete example example, the following exposes the RethinkDB administration interface on localhost:8081:

ssh -L 8081:localhost:8080 [email protected]

Using a Reverse Proxy

Because using a reverse proxy involves setting up Apache, Nginx, or some other software on your VPS, it is better to refer you to the official RethinkDB documentation on the subject. The setup steps aren't long, but out of the scope of this tutorial.

If you setup a reverse proxy, make sure you still allow local traffic to the web administration port.

sudo iptables -I INPUT -s 127.0.0.1 -p tcp --dport 8080 -j ACCEPT

Allowing Access for Your IP Address

One final method we'll go over for allowing access to the web UI from yourself is through whitelisting your IP address. This is done in a similar way to allowing local access to port 8080, except with your own IP address instead of 127.0.0.1. After finding your external IP address, you can run the following command on the VPS, replacing $IPADDRESS with the IP address:

sudo iptables -I INPUT -s $IPADDRESS -p tcp --dport 8080 -j ACCEPT

However, I would like to reiterate the insecurity of this method-- anyone with your external IP address, including those on your WiFi or home network, will have unrestricted access to your database.

Allowing Access for Client Drivers

Now that you've allowed yourself access to the web administration UI, you also need to ensure that the client drivers and your application can access the client port properly, and that the access is secured with an authentication key.

Setting an Authentication Key

First and foremost, you should set an authentication key for your database. This will require all client driver connections to present this key to your RethinkDB instance to authenticate, and allows an additional level of security.

On your VPS, you'll need to run two commands-- one to allow for local connections to the cluster port in order to run the administration command line interface, and the other to set the authentication key:

sudo iptables -I INPUT -s 127.0.0.1 -p tcp --dport 29015 -j ACCEPT

Next, we'll run the RethinkDB command line tool:

rethinkdb admin --join 127.0.0.1:29105

This will bring you into the command line administration interface for your RethinkDB instance. You can run a single command, set auth $AUTHKEY, replacing $AUTHKEY with your authentication key.

After you're done, you can type exit to leave the administration interface, or you can take a look at the RethinkDB documentation to see other commands you can run.

If you recall, at this point, the client port (by default, 28105) is still accessible from the public Internet or on whatever interfaces you've bound RethinkDB to. You can increase security to your database by blocking access (or selectively allowing access) to the client port using iptables and commands similar to those listed earlier in the tutorial.

Further Reading

Now that you've setup RethinkDB and secured it using iptables, you can access the administration UI and connect to your instance using a client driver with an authentication key. Though we've taken basic security measures to run RethinkDB on DigitalOcean, it's still recommended to take additional precautions. For example, you may wish to either use a mesh VPN such as tinc to encrypt the database traffic between your clustered instances, if you choose to expand your cluster in the future.

It's also worth reading over the fantastic RethinkDB official documentation for additional instruction on configuring your instance or cluster, or on how to use the administration interface or the ReQL query language8.

- Private networking is only supported in specific data centers at this time, including the NYC 2, AMS 2, and Singapore data centers. ↩

- DigitalOcean's pricing page has a switch that lets you see the hourly or monthly price of their servers. The monthly price is the maximum you can pay per month for that specific server, even if the number of hours in a month times the hourly price is more. For example, a 512 MB VPS is $0.007 per hour or $5 a month. The maximum days in a single month is 31, times 24 hours, times $0.007 per hour equals about $5.21. However, because the monthly price of the VPS is $5, you only pay that amount. ↩

- DigitalOcean calls their VPS servers "Droplets". This is similar to Amazon's "Instance" terminology. ↩

- I highly recommend at least using a 1 GB Droplet if you're planning on actually using RethinkDB or trying it out with large amounts of data. If you just want to check out RethinkDB and see how it works, you can start out with a 512 MB Droplet just fine. ↩

- Remember, you must select a region with private networking (such as NYC 2, AMS 2, or Singapore) if you wish to create a cluster and use the private IP address for cluster communication. This way, you're not billed for cluster traffic. However for a single node, you may choose any data center you'd like. ↩

-

Getting an error saying you do not have the command "add-apt-repository"? If you're running Ubuntu 12.10 or newer, then install it with

sudo apt-get install software-properties-common. Ubuntu versions older than 12.10 should use the commandsudo apt-get install python-software-properties. ↩ -

You can find your private IP address (or public IP address) in your DigitalOcean control panel, under the Droplet you're running, and in the settings tab:

↩

↩

- I realize this is redundant. ↩

Nowadays, it's rare that a technology direct from science fiction makes it to a household appliance before your smartphone or laptop. For example, fingerprint scanners, common in some industrial and high-security applications, finally appeared in several laptops, the Motorola Photon, and most recently the iPhone 5S. But wireless charging has been integrated into electronic toothbrushes for over a decade, and yet we've seen a minimal number of consumer devices integrated with the technology.

In 2009, Palm announced the Palm Pre smartphone based on WebOS and the Touchstone inductive charger. The phone, while not a huge success, ultimately saw the inclusion of the Touchstone wireless back cover with future iterations of the device. However once the Palm Pre faded into obscurity along with its sibling devices, the concept of built-in wireless charging faded with it.

Last year, Starbucks continued their rollout test of the Powermat wireless charging standard to several Silicon Valley stores after trialing the tech in Boston. This move is fairly controversial, given the battle between the Power Matters Alliance (the owner of the Powermat technology), the Wireless Power Consortium's Qi charging standard (pronounced "Chee"), and the Alliance for Wireless Power's Rezence. To add to the wireless-power drama, Powerkiss, a company that originally produced Qi wireless charging dongles for phones, flipped between standards entirely when it was acquired by Powermat Technologies at the beginning of 20131.

On one front, the Power Matters Alliance appears to be winning-- they have integrations with several Starbucks stores around the United States, including those from the trials in San Jose and Boston, as well as several highly trafficked areas like Madison Square Garden2. Additionally, in November, The Coffee Bean & Tea Leaf announced that their LA stores would also have integrated Duracell Powermat chargers3. While the actual number of places that Powermat technology is available is quite small in contrast to other technologies such as USB charging ports, in comparison to Qi, Powermat is the clear winner.

However, in order to use these charging hotspots, you must own a Powermat compatible device because none of the current standards are compatible. But there's a catch-- there are, as of publication, no smartphones or tablets with built in Powermat technology. This is a significant downfall of the technology and in order to use it, you must purchase an often bulky accessory to enable wireless charging. What's worse is that there are few cases on the market that do not fit phones other than those from Apple, HTC, Samsung, or Blackberry. As a bonus, the cases that are available, with the exception of those for the iPhone, are usually only manufactured for older models of phones.

As a result, even though there are several large venues with Powermat stations installed, you'll see few of the chargers in use. Additionally, those that do take advantage of the Powermat hotspots will have something in common-- they will be using a third-party case, almost certainly sold by Duracell Powermat, to have the privilege to use the technology.

Where does that leave Qi, the second large wireless power standard?

Unlike Powermat, Qi has refrained from making a big splash in the media by deploying integration trials around the world. Where Qi excels is its integrations with hardware vendors, which arguably makes the Wireless Power Consortium's standard more appealing to consumers than Powermat.

For example, Google, Nokia, and Samsung have recently released phones with Qi support or integration, including the Google Nexus 4, Nexus 5, Nexus 7, and Nokia Lumia 820. Additionally, Samsung's Galaxy Note 3 and Galaxy S4 include support for Qi inserts and backplates.

Yet, one phone remains problematic-- the Apple iPhone. Without a way to change the backplate or battery of the device, it's nearly impossible to seamlessly integrate the Qi charging standard with the phone without Apple's official support. A clever exception to this appeared on Indiegogo at the end of 2013-- the iQi. This slim adapter utilizes a thin ribbon cable to attach to the Lightning port at the bottom of the iPhone, which allows you to tuck the wireless power coil into your soft case without having extra bulk at the bottom of your device.

With one of the largest obstacles to widespread wireless charging use--Apple--nearly solved using Qi technology, why is it that Powermat still is largely considered a winner by the media in the wireless power war? The answer can be found in the format war between Toshiba's HD-DVD and Sony's Blu-ray format: marketing and integration. Unlike Toshiba, Sony had an extremely important piece of hardware that was Blu-ray compatible-- the Playstation 3 game console. On top of that, Sony's ability to produce content for the Blu-ray format made it nearly impossible for Toshiba's HD-DVD to keep pace. It was ultimately Warner Bros. dropping HD-DVD for its content that caused the HD-DVD format to die off, but only after a long TV marketing battle between Blu-ray and HD-DVD that resulted in Toshiba's standard being killed off4.

The Power Matters Alliance clearly is attempting to win the wireless charging war through sheer brute force. Placing Powermat chargers in the consumer's face through McDonalds and Starbucks has resulted in glowing press releases and the illusion that Powermat is vastly superior. It's smart marketing, playing to the dream of being able to set your phone on a table while you're getting coffee and have it charge, but ultimately it's deceiving to the consumer when this reality is years away from fruition.

Though the media primarily focuses on the war between the Power Matters Alliance and the Wireless Power Consortium, there's a third standard that has emerged out of the battleground-- the Alliance for Wireless Power, or "A4WP". Rather than focusing on electromagnetic induction, the primary method of power transfer current Qi and Powermat chargers use, A4WP's Rezence technology uses magnetic resonance to deliver power across distances. An advantage of magnetic resonance charging technology is the ability to provide remote electricity beyond a simple charging mat, potentially throughout whole rooms or venues. The idea of walking into your living room or bedroom and having your phone, still in your pocket, begin to charge is an idea straight out of science fiction. Potential future applications of magnetic resonance power transfer include lightbulbs or other small appliances that can be fixed anywhere in the room, without the need for wires leading to them. Even more exciting, for some home owners, is the prospect of having wirelessly charged smoke detectors that never run out of battery power.

However, A4WP faces the same issues present in the battle between Powermat and Qi-- adoption. In fact, A4WP's Rezence hasn't been developed into a product at all, and early-2014 is the earliest anyone will be able to get their hands on the technology. Fortunately, the smaller A4WP isn't the only standards group to consider magnetic resonance-- Qi plans to incorporate a backwards compatible magnetic resonance based charging platform in a future version of the standard5 and WiTricity's membership to the Power Matters Alliance also indicates magnetic resonance as future direction for Powermat6. The magnetic resonance technology, demonstrated at CES 2014 by the A4WP, is a vital step towards widespread adoption of wireless charging. While traditional induction chargers often require careful placement on a charging pad, the second generation of Qi mats, for example, can transmit power over larger distances and with higher efficiency-- up to 80%5.

But recent news7 indicates that the Alliance for Wireless Power and the Power Matters Alliance have signed a preliminary agreement to work with each others' technologies. This, along with PMA's adoption of WiTricity, will increase difficulties for Qi. While there were previously three competing standards, there are now two.

Ultimately, the war will be won by consumer awareness and adoption-- not technology. While Qi has a head start on integration with phones, A4WP's merger with PMA's standard may lead to interesting solutions in the marketplace. If PMA-A4WP can get a product out in the market--and soon--Qi may be dead in the water.

The Power Matters Alliance currently has over a hundred members 8, including Samsung Electronics, Duracell Powermat, BlackBerry Limited, HTC, Qualcomm, Starbucks, Sony, and others.

The Wireless Power Consotrium has over two hundred members 9, including Samsung Electronics, Energizer, Motorola Mobility, HTC, and others.

- http://www.crunchbase.com/company/powerkiss ↩

- http://www.thegarden.com/duracellpowermat.html ↩

- http://www.prnewswire.com/news-releases/duracell-powermat-launches-wireless-smartphone-charging-pilot-program-with-the-coffee-bean--tea-leaf-231558701.html ↩

- http://www.pcmag.com/article2/0,2817,2264994,00.asp ↩

- http://www.wirelesspowerconsortium.com/blog/35/resonant-qi-charger ↩ ↩

- http://www.powermatters.org/menuless/260-witricity-joins-pma ↩

- http://www.theverge.com/2014/2/11/5398066/a4wp-and-pma-merge-tech-to-win-wireless-charging-war ↩

- http://www.powermatters.org/members ↩

- http://www.wirelesspowerconsortium.com/member-list/ ↩

The past week I've been busy with a small project of mine that I've been planning on getting off the ground since March of last year-- Jekyll Themes. Jekyll Themes is a repository for authors to list themes and pre-built templates for the Jekyll static site generator.

While I've previously written about how to create a Jekyll website from scratch, a lot of developers or bloggers don't necessarily want to spend the time designing or creating a website from a blank canvas. Thankfully, there are a lot of great themes out there, but many of theme are spread throughout individual GitHub pages and projects. Hopefully, with Jekyll Themes, the themes scattered across the internet can be consolidated into a single listing where they are tagged by their color scheme, responsive-ness, or other attributes.

Kraken is a web service designed to perform a similar function to desktop based applications such as ImageOptim. For as little as $7 a month (for half-a-gigabyte of images processed a month), you can have Kraken.io process your images and compress them. Alternatively, you can use their free web service by uploading files individually. The service works significantly faster than ImageOptim because of the powerful servers that they use to crunch your images.

But, how does it compare to the desktop equivalent?

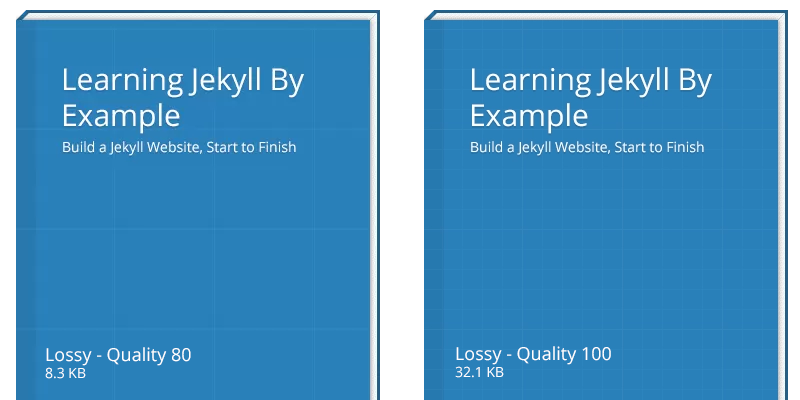

The quick answer is, "it depends." Minimally, Kraken's optimization is nearly as good as ImageOptim, or equal to it. For example, Kraken configured with "Lossless" settings saved an average of 34% off of the original file size off of several PNG images, whereas ImageOptim saved 35.1%. The difference is relatively minor.

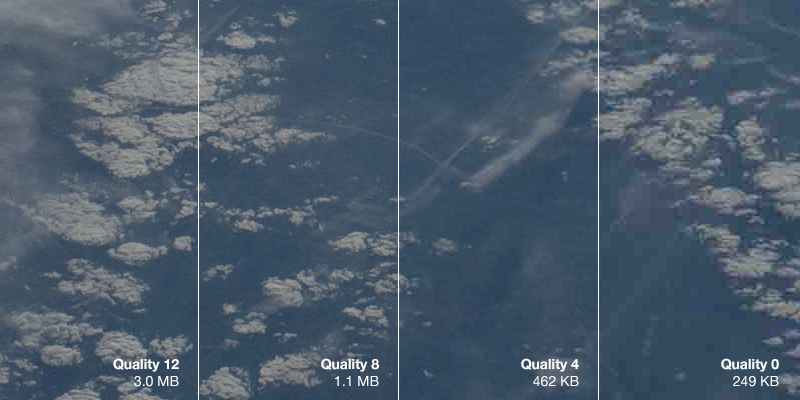

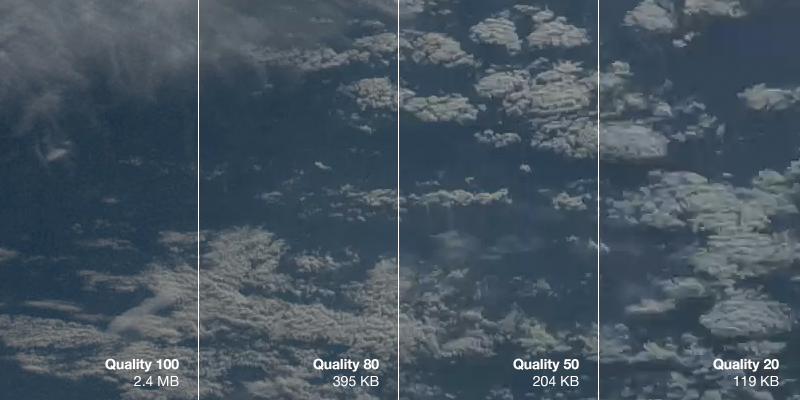

In the JPG arena, Kraken initially appears to perform significantly better than ImageOptim, saving 47% of the original file size versus ImageOptim's 34%. However, Kraken obtains these savings by reducing the image quality setting of the JPG. Conversely, ImageOptim has a maximum quality setting, but will not reduce the quality beyond this user set number. Instead, ImageOptim performs optimizations with the file format itself to achieve a lossless compression. This is similar in behavior to Kraken's ability to losslessly compress JPG files.

For example, the following two images were compressed by Kraken's web service-- one losslessly, one with lossy compression. As you can see, Kraken actually does a very good job with its compression in this case and does not introduce any significant artifacts into the image, despite the 73% savings over the original image.

|

|

| 380kb ~21% Savings |

120kb ~74% Savings |

To the casual observer, there is virtually no difference between the two images above. The most noticeable area where artifacts are introduced is around the lights hanging across the road, though it is a relatively minor side effect to shaving off nearly 3/4 of the original file size. You can see this yourself by clicking on the images above and opening the full sized images in new browser tabs, zooming in, and then switching back and forth between tabs.

However, this isn't necessarily true for all images. For example, the following Nike Fuelband+ SE press images have been run through Kraken-- both with lossless and lossy compression.

|

|

| 47kb ~4% Savings |

16kb ~67% Savings |

The lossy compressed image has a significant number of artifacts around the face of the band, resulting in an image that does not look optimal. Interestingly, the lossless compressed image from Kraken is the same size as the image produced by ImageOptim-- likely because Kraken's using the same optimization backend as ImageOptim, with some additional fine tuning.

A point to note is that Kraken does not accept images above 1mb in size for the free web interface, or above 8mb for any of their paid plans. This may be an issue when you're attempting to compress photographs straight off of camera, or large PNG infographics and such. You will have to shrink the files by hand locally prior to using the Kraken web service. In contrast, desktop based apps such as ImageOptim have no limitation on the file size in practice.

Another primary issue with Kraken is the lack of ability to change quality settings. This is especially a problem with lossy compression, where the quality of the image is degraded. It'd be ideal to have a slider to determine what quality to file size ratio is desired.

Kraken's paid plans have several additional features over the free web interface, such as the ability to optimize images by pasting a URL, as well as scan an entire website and optimize all images. Additionally, Pro users have the ability to save images in their Kraken Cloud Storage account.

In conclusion, Kraken is a great web service to replace existing desktop apps such as ImageOptim. Pro users also have the neat ability to use the API, so that images can be optimized on a website's deployment. As long as you're happy with the default settings Kraken provides, however, it's a great service and will help you maintain high performance on your own website.

When I first started my blog, I used Tumblr. I didn't choose it for the social integration or community, but rather to offload the management of servers to a third party.

My decision was justified when one of my posts, Captchas Are Becoming Ridiculous, hit the top spot of Hacker News. Over the course of two days, over 22,000 visitors visited my post. It's common to see the servers of front page Hacker News posts struggle or even go down entirely due to the surge of traffic, but thanks to Tumblr, my website stayed online the entire time.

But while Tumblr was resilient to sudden surges in traffic, the service has had its struggles and periodically went offline. There's several huge, day long gaps in my Analytics-- a sign I need to move to another platform.

Moving to Jekyll

Moving to Jekyll gave me the opportunity to give my entire website a facelift and bring the design of both my portfolio and blog in line. Building the new theme, which is based on Bootstrap, and learning how to use the Liquid template system and Jekyll took less than two working days.

Like before, I'm not managing my own servers. While I love Heroku, I also wanted to be able to scale and manage a sudden spike in traffic. Because this website is now static HTML, a single Heroku instance would probably have been fine, but I took the opportunity to experiment with some new technology.

Amazon S3 and CloudFront

This Jekyll site is hosted on Amazon S3 and the Amazon CloudFront CDN. As a result, it's like my website and blog is being hosted on multiple servers around the United States and Europe rather than a single instance as it would have been if I hosted with Heroku. This allows for the website to be blazing fast, no matter where my visitors are on the planet.

CloudFront has a limit of nearly 1,000 megabits per second of data transfer and 1,000 requests per second by default. If I were to need additional capacity, I could always request an increase, but at these maximum rates, I would be handling over 300 TB a data month and 2.6 billion page views. Somehow I don't think I'll ever hit that.

Performance Numbers

By moving to CloudFront, my blog received a massive performance upgrade. According to Pingdom's tool, my blog loads faster than 97% to 100% of websites, depending on the page visited.

Home Page

Prior to adding my portfolio to the home page (the images are extremely large and unoptimized: ~1.9 mb total, and I will be reducing them in the future), I was getting load times of 160ms from the Amsterdam server. Without Javascript, the load time decreased to a blistering 109ms-- literally as fast as a blink of an eye. By Pingdom's numbers, this meant my website was faster than 100% of websites. From New York, with Javascript, the website loaded in approximately 260ms. Not bad, but significantly slower.

I am currently evaluating the trade off of having jQuery and the Bootstrap Javascript file simply for the responsive collapsable menu (try resizing your window and look at the menu-- clicking the icon will toggle the menu, which is powered by jQuery). jQuery is approximately 30 kb, and I use very little of its functionality as it stands. The Bootstrap script isn't as bad, weighing in at 2 kb (I stripped everything but the collapsable plugin out). I'll likely leave it in because it will give me flexibility in the future, but I really wish Zepto worked with Bootstrap since it is a third of the size of jQuery.

With images, my page loads in approximately 370ms-- pretty good for how large the images are. It takes over 250 ms for the fastest image to download, so if optimized, I'm confident I'll be able to decrease the load time to under 250ms once again.

Blog Home Page

The blog home page has no images-- only the Elusive web font for the social media icons in the sidebar. The font weighs in at ~225 kb and adds nearly 50ms to the load time, for a total of approximately 300ms.

Blog Content Page

This is the most important page-- the majority of visitors to my website arrive from Google and land on one of these pages, so it must load quickly.

Thanks to Disqus, I'm seeing load times of 650ms, which is significantly worse than any other page on my website. Unfortunately, there's nothing I can do about this, and I feel that the ability to have a discussion is important and worth the extra load time.

Causes of Latency

The biggest cause of latency is external media, such as large photographs and the Elusive web font. To further optimize the page, I'll have to remove the Bootstrap icon set and the web font, opting for retina-resolution images for the social media buttons. To prevent additional requests, I can inline-embed these images into the CSS using base 64 encoding or use a sprite sheet.

Disqus also contributes significantly and causes over 70 different requests to an external server to load stylesheets and content. When compared to my website, which only makes 7 requests (including all web fonts and Google Analytics), you can see why the load time is significantly higher on blog articles with comments.

It's also important to note these numbers are from Pingdom's test-- a server with very high bandwidth. It will be significantly slower for those on 3G connections, but the gains will also be apparent.

Optimizations

These load times were't achieved by chance, but rather a large build process I wrote in a Makefile.

After running make, Jekyll builds the website. I use the Jekyll Asset Pipeline to combine all of my Javascript and CSS files into single, minified scripts and stylesheets. The Javascript is set to defer as to not block the page render.

After this is done, CSS is compressed using YUI and all HTML, Javascript, and CSS is GZipped.

Finally, using s3cmd, all of the GZipped content is synced to Amazon S3 with the proper headers (content-encoding as well as cache-control: maxage) and the CloudFront distribution is invalidated. Unfortunately, S3 doesn't allow for the Vary: Accept-Encoding header to be set (technically, this is right since the server doesn't actually vary GZipping based on browser capabilities), so many page speed tests will complain about this.

After the invalidation propagates, the website is then viewable with the new content.

By offloading all content processing (and GZipping) to my computer during build time versus during the actual request, as CloudFlare or another caching layer might do, we can shave off a few milliseconds.

I'm extremely happy with this new setup and it also gives me new flexibility I didn't have before with Tumblr, including the ability to enhance some of my articles with cool media and custom, per article layouts (a la The Verge). When I complete one of these articles, I'll be sure to do another writeup showing how it's done.

While I've previously gone over development environments using Vagrant and Puppet, recent advancements in LXC container management (see: Docker) and applications that have popped up using this technology have made deploying to staging or production environments easier-- and cheaper.

Heroku, a fantastic platform that allows developers to focus on code rather than server management, has spoiled many with its easy git push deployment mechanism. With a command in the terminal, your application is pushed to Heroku's platform, built into what is known as a "slug", and deployed onto a scalable infrastructure that can handle large spikes of web traffic.

The problem with Heroku is its cost-- while a single "Dyno" per application, which is equivalent to a virtual machine running your code-- is free, scaling past a single instance costs approximately $35 a month. Each Dyno only includes half a gigabyte of RAM as well, which is minuscule compared to the cost-equivalent virtual machine from a number of other providers. For example, Amazon EC2 has a "Micro" instance with 0.615 gigabytes of RAM for approximately $15 a month, while $40 a month on Digital Ocean would net you a virtual machine with 4 gigabytes of RAM. But, with Heroku, you pay for their fantastic platform and management tools, as well as their quick response time to platform related downtime-- certainly an amazing value for peace of mind.

But, if you're only deploying "hobby" applications or prefer to manage your own infrastructure, there's a couple of options to emulate a Heroku-like experience.

Docker, LXC, and Containers

If you've been following any sort of developer news site, such as Hacker News, you've likely seen "Docker" mentioned quite a few times. Docker is a management system for LXC containers, a feature of Linux kernels to separate processes and applications from one another in a lightweight manner.

Containers are very similar to virtual machines in that they provide security and isolation between different logical groups of processes or applications. Just as a hosting provider may separate different customers into different virtual machines, Docker allows system administrators and developers to create multiple applications on a single server (or virtual server) that cannot interfere with each other's files, memory, or processor usage. LXC containers and the Docker management tool provide methods to limit RAM and CPU usage per container.

Additionally, Docker allows for developers to export packages containing an application's code and dependencies in a single .tar file. This package can be imported into any system running Docker, allowing easy portability between physical machines and different environments.

Dokku and Deployment

Containers may be handy for separation of processes, but Docker alone does not allow for easy Heroku-like deployment. This is where platforms such as Dokku, Flynn, and others come in. Flynn aims to be a complete Heroku replacement, including scaling and router support, but is not currently available for use outside of a developer preview. Conversely, Dokku's goal is to create a simple "mini-Heroku" environment that only emulates the core features of Heroku's platform. But, for many, the recreation of Heroku's git push deployment and basic Buildpack support is enough. Additionally, Dokku implements a simple router that allows you to use custom domain names or subdomains for each of your applications.

Digital Ocean is a great cloud hosting provider that has recently gained significant traction. Their support is great and often responds within minutes, and their management interface is simple and powerful. Starting at $5 per month, you can rent a virtual machine with half a gigabyte of RAM and 20 gigabytes of solid state drive (SSD) space. For small, personal projects, Digital Ocean is a great provider to use. Larger virtual machines for production usage are also reasonably priced, with pricing based on the amount of RAM included in the virtual machine.

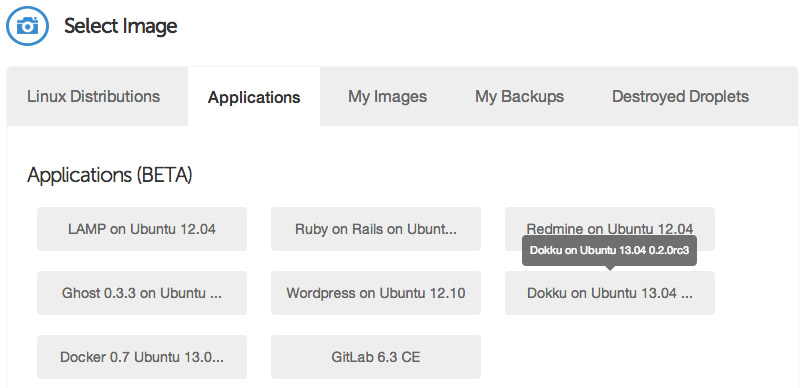

Another reason why Digital Ocean is great for Docker and Dokku is due to their provided pre-built virtual machine images. Both Dokku 0.2.0-rc3 and Docker 0.7.0 images are provided as of this publication, and in less than a minute, you can have a ready-to-go Dokku virtual machine running.

If you don't already have a Digital Ocean account, you can get $10 in free credit to try it out through this link. That's enough for two months of the 512 MB RAM droplet, or a single month with the 1 GB RAM droplet.

Setting Up the Server

After you've logged into Digital Ocean, create a new Droplet of any size you wish. The 512 MB instance is large enough for smaller projects and can even support multiple applications running at once, though you may need to enable swap space to prevent out-of-memory errors. The 1 GB Droplet is better for larger projects and runs only $10 per month. If you are simply experimenting, you only pay for the hours you use the instance (e.g. $0.007 an hour for the 512 MB Droplet), and Digital Ocean regularly provides promotional credit for new users on their Twitter account. If you follow this tutorial and shut down the instance immediately afterwards, it may cost you as little as two cents. You can choose any Droplet region you wish-- preferably one that is close to you or your visitors for the lowest latency. Digital Ocean currently has two New York, two Amsterdam, and one San Francisco datacenter, with Singapore coming online in the near future. Droplets cost the same in each region, unlike Amazon or other providers.

Under the "Select Image" header on the Droplet creation page, switch to the "Applications" tab and choose the Dokku image on Ubuntu 13.04. This image has Dokku already setup for you and only requires a single step to begin pushing applications to it.

Select your SSH key (if you haven't already set one up, you will need to do so before launching your Droplet), and then hit the big "Create Droplet" button at the bottom of the page. You should see a progress bar fill up, and in approximately one minute, you'll be taken to a new screen with your Droplet's information (such as IP address).

Take the IP address, and copy and paste it into a browser window. You'll see a screen popup with your SSH public key, as well as some information pertaining to the hostname of your Dokku instance. If you specified a fully qualified domain name (e.g. apps.example.com) as your Droplet's hostname when you created it, the domain will be automatically detected and pre-filled in the setup screen. If this is the case, you can just check the "use virtualhost naming" checkbox and hit "Finish" and continue to setup your DNS.

However, if you entered a hostname that is not a fully qualified domain name (e.g. apps-example), you'll just see your IP address in the Hostname text box. Enter the fully qualified domain name that you'll use for your server, select the "virtualhost naming" checkbox, and click "Finish Setup". For example, if you want your applications to be accessible under the domain apps.example.com, you would enter apps.example.com in the "Hostname" field. Then, when you push an app named "website", you will be able to navigate to website.apps.example.com to access it. You'll be able to setup custom domains per-app later (e.g. have www.andrewmunsell.com show the application from website.apps.example.com).

In any case, you'll be redirected to the Dokku Readme file on GitHub. You should take a minute to read through it, but otherwise you've finished the setup of Dokku.

DNS Setup

Once your Droplet is running, you must setup your DNS records to properly access Dokku and your applications. To use Dokku with hostname-based apps (i.e. not an IP address/port combination), your DNS provider must support wildcard DNS entires. Amazon Route 53 is a relatively cheap solution that supports wildcard DNS entires (approximate cost of $6 per year), while Cloudflare (free) is another.

To properly resolve hostnames to your Droplet running Dokku, two A DNS records must be set:

A [Hostname] [Droplet IP address]

A *.[Hostname] [Droplet IP address]

For example, if your Droplet is running with a hostname of apps.example.com and you wish to use apps under *.apps.example.com, you would use the following DNS records:

A apps.example.com [Droplet IP address]

A *.apps.example.com [Droplet IP address]

For more information on DNS, see various resources available to you on the Internet, including Cloudflare's documentation on the subject.

Deploying Code

Dokku allows for many of your existing apps, including those built for Heroku, to immediately run on your own Dokku instance. Dokku uses a package called a "Buildpack" to define how your application is packaged for deployment. For example, the PHP Buildpack defines behavior to pull down compiled versions of Apache, PHP, and other dependencies, and perform basic setup to run a PHP application. Similarly, the Node.js Buildpack retrieves dependencies by fetching the Node.js binary, NPM, and all of your application's dependencies as defined in package.json.

To illustrate how Dokku works, we'll create a simple Node.js application that defines dependencies and responds to HTTP requests with "Hello, World!"

Create a new directory on your computer with the following files and contents:

/package.json

{

"name": "dokku-demo-application",

"version": "1.0.0",

"private": true,

"engines": {

"node": ">=0.10.0",

"npm": ">=1.3"

},

"dependencies": {

"express": "~3.0"

}

}

/server.js

var PORT = process.ENV.PORT || 8080;

var express = require("express");

var app = express();

app.use(app.router);

app.use(express.static(__dirname + "/public"));

app.get("/", function(req, res){

res.send("Hello, World!");

});

app.listen(PORT);

/Procfile

web: node server.js

This is a simple example Express application that creates a single HTTP GET route--the root directory /--and responds with a single phrase. As you can see, this Dokku application's structure mirrors Heroku's requirements. The Procfile defines a single "web" command to be started. All Dokku Buildpacks will normally ignore other process types defined in the Procfile.

After you've created the files, create a Git repository using a Git GUI such as Tower or SourceTree, or the command line, and commit the previously created files. You'll also need to define a remote repository-- your Dokku instance. For example, if your Dokku instance was hosted at apps.example.com, you would define an remote of [email protected]:app-name. You can modify the app-name as desired, as this will correspond to the subdomain that your application will be served from.

Once you've added the remote, push your local master branch to the remote's master. If everything is setup correctly, you'll see a log streaming in that indicates Dokku's current task. Behind the scenes, Dokku creates a new Docker container and runs the Buildpack's compilation steps to build a Docker image of your application. If the build succeeds, your application is deployed into a new container and you will are provided with a URL to access your application at. In this example, the application would be accessible at http://app-name.apps.example.com/ and will display "Hello, World!"

Using a Custom Domain

While your application is accessible at the subdomain provided to you after your application is deployed, you may also want to use a custom domain for your application (e.g. api.example.com). You can do this in two ways-- use the fully qualified domain name desired for your application's repository name, or edit the generated nginx.conf file on your Dokku server to include your domain name.

The first method is quite simple-- instead of pushing your repository to [email protected]:app-name, you simply name your app based on your domain. For example: [email protected]:api.example.com.

Alternatively, you can SSH into your Dokku instance using the IP address or hostname and the root user to modify the nginx.conf file for your application. Once you're SSH-ed into your instance, simply change directories to /home/dokku/[application name] and edit the nginx.conf file. For example, the application we pushed ("app-name") would be found at /home/dokku/app-name. To add your own domain, simply add your custom domain name to the end of the server_name line, with each domain separated by spaces. Changes to the domain in this file will not be overwritten on the next git push.

Going Further

As you can see, Dokku is an incredibly powerful platform that mimics Heroku very closely. It provides the basics needed for deploying an application easily, and allows for quick zero-downtime deployments. With a host like Digital Ocean, you can easily and cheaply host multiple applications. Careful developers can even deploy to a separate "staging" application before pushing to the production app, allowing for bugs to be caught before they're in the live environment.

In February of 2012, Nike released the Nike+ FuelBand-- a sleek, discreet wristband that tracks your everyday activities and awards you "NikeFuel points", a proprietary metric designed to consolidate different types of activities into a universal standard. With competitors such as FitBit already gone through several iterations of high-tech wearable pedometers, Nike needed to develop a device that worked well, and looked good.

The original FuelBand received mixed reviews, with many users complaining about reliability over time. Despite the hardware issues, Nike's FuelBand was a solid entry into the "Quantified Self" movement that seems to be increasing in popularity.