
One thing I ran across when building my website with Jekyll, a static website generator, was that it restricted my ability to have dynamic content, such as HTML forms and blog comments.

Disqus, a popular service that manages comments, alleviated the latter pain. However, the inability to have a simple contact form was a huge problem. There are a couple of third-party services that allow you to build forms, but many of them (such as Google Forms) are unnecessarily complex. They are often embedded in iframes or take you to their own domain to submit the form, so visitors either see a form that doesn't match the rest of the website stylistically or are taken away from your website altogether.

To fix this, I built Formingo. Formingo is a new service that allows you to easily create HTML forms that get sent directly to your email address. It's completely free to use for up to 500 submissions a month, and there are a ton of new features coming. In fact, just today, I launched pre-verified email addresses and domains.

Create Your Formingo HTML Form in Seconds

You can literally start using Formingo in seconds. If you have an existing HTML form, simply point it to POST at the Formingo service URL and change the example email address to your own.

```html
<form method="POST"
      action="https://www.formingo.co/submit/[email protected]">
  <input name="name" type="text" />
  ...
</form>
```

That's it. Once you change the action URL for your form, it'll immediately start working. You'll get all the fields in the form submitted directly to your email address.

I have several new features coming soon, including:

- Anti-spam

- Connect your forms to Google Sheets, IFTTT, and more

- View and export form responses as CSV, JSON, and XML

- View form response analytics

Let me know what you think about Formingo, and be sure to get in contact with me if you have a feature you'd like to see.

I pre-ordered the Oculus Rift, a virtual reality headset, on January 6th of this year. After following the Rift from its Kickstarter campaign to trying it in person at PAX 2014, I've been waiting for the promise of virtual reality for years. Last week, my dream finally came true-- I received one of the very first Oculus Rift "CV1" headsets. After plugging it in and launching demos that cannot be explained with words, I noticed something wrong with my PC. The CPU fan was going crazy, and the sensors were reporting abnormally high temperatures.

TL;DR: EVE: Valkyrie, a game for the Oculus Rift, can occasionally change your power management settings at the wrong time, leaving your processor unable to throttle down and potentially causing a lot of heat and noise. This was exacerbated by my old, power-hungry processor, but it might still affect others. You can jump right to the fix if you're not interested in my thought process for diagnosing the issue.

My computer is relatively old; in fact, it's based on an OEM HP PC. My motherboard is some obscure brand I can only assume sells exclusively to HP, and my processor is a nearly-6-year-old Intel 970. Though it has 6 true cores, it often shows its age when I try to run modern software. The PC originally came with an NVIDIA GTX 580 (a top-of-the-line GPU in its time), but I've swapped it out for a newer NVIDIA GTX 970. The old OEM HP case was also beginning to fall apart, with the metal warped and screws rattling inside, presumably from one of the many times I've opened the computer up. I've since switched to a Fractal Design R5, which completely hides that this computer is still based on an OEM model from 2010.

Both Oculus and HTC/Valve have a recommended specification for virtual reality headset users. On the GPU front, I match the recommended specification with my NVIDIA GTX 970. But due to the "Constellation" head tracking system that Oculus uses, they have a recommended CPU model that is many years newer, more powerful, and more efficient than my aging Intel 970. This initially concerned me, but I decided to defer the potentially messy motherboard-CPU-RAM upgrade until some later time. My confidence increased once Valve released a virtual reality benchmark suite, which put my computer as a whole squarely into the "Good for VR" category. Though Valve themselves indicated that the benchmark suite didn't really represent CPU readiness, the fact that my computer could run the benchmark was still reassuring.

An Ominous Whir

I received my Oculus Rift on Thursday, March 31st, and excitedly set it up for the first time. I had pre-loaded the software onto my computer a couple of days earlier, on "launch day"[1], so I was almost ready to go. Though my impressions of the Oculus Rift and the arrival of consumer-ready virtual reality are enough for a post in themselves, it's safe to say that I was incredibly impressed. The "CV1" was a remarkable improvement on the older development kits, and I was extremely satisfied with my purchase.

Though I was oblivious to it while using the headset myself (thanks to the audio, which I admittedly was impressed with), I noticed a fan inside my case kicking into high gear when I demoed the headset to others. At first I was unconcerned, since I assumed my aging processor was simply struggling with this new, demanding technology.

After an exciting day of demoing the new technology to my family and having some time to play with it myself[2], I shut down my computer for the day. When I started it back up the next day, however, I noticed something was extremely wrong: without any software running, immediately after my computer booted up, that same fan I noticed earlier was running at full force. After some investigation (read: putting my ear to my computer to find the source of the sound), I figured out that the sound was from my CPU fan running at 100%.

Diagnosing the Problem

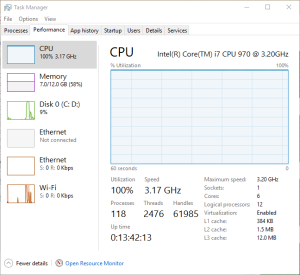

The first thing to check was obviously the built-in Task Manager. My initial thought was that some rogue process was running my CPU into the ground, but though Task Manager indicated my CPU was at 100% load, there weren't any processes that were obvious culprits. Chrome had occasional spikes into the 50%-usage territory, but nothing out of the ordinary.

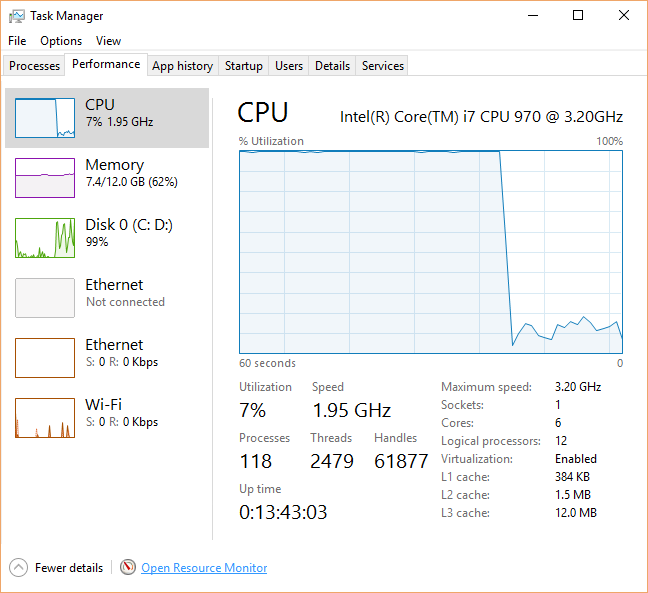

When I switched to the "performance" tab, I noticed something odd. The CPU graph indicated 100% usage, and my processor was running at the full 3.3 GHz without throttling down.


One thing to note about recent Intel processors is their ability to throttle down under low loads. This feature, called EIST or SpeedStep, lowers the CPU multiplier to reduce the clock speed and, as a result, the power usage and heat generated. This is a good thing under normal circumstances: less heat is generated, which can extend the life of your processor.
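To make the multiplier idea concrete, here's a tiny JavaScript sketch. The clock speed is simply the base clock (BCLK) times the multiplier; the 133.33 MHz base clock and the 24x/12x multipliers below are assumed, illustrative values for a processor of this era, not numbers I read off my own machine.

```javascript
// Core clock = base clock (BCLK) x CPU multiplier.
// EIST/SpeedStep lowers the multiplier under light load, which lowers the
// clock speed (and with it, power draw and heat).
function coreClockGHz(baseClockMHz, multiplier) {
  return (baseClockMHz * multiplier) / 1000;
}

// Assumed, illustrative values only.
const BASE_CLOCK_MHZ = 133.33;
const fullSpeed = coreClockGHz(BASE_CLOCK_MHZ, 24); // under load
const throttled = coreClockGHz(BASE_CLOCK_MHZ, 12); // lightly loaded

console.log(fullSpeed.toFixed(2), throttled.toFixed(2)); // 3.20 1.60
```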

On a normal PC, if you look at the "performance" tab of Task Manager, you will see the "Speed" number fluctuate. At high loads it will likely reach or exceed your processor's rated clock speed[3], but when you're sitting in Chrome or checking your email, you will likely see this number drop to a fraction of the normal speed. For example, my Intel 970 runs at 3.2 GHz, though I regularly see it drop to 1.57 GHz.


However, this throttling didn't occur, and the utilization remained at a constant "100%". I looked at the "details" tab of Task Manager-- which shows all processes, including the System Idle Process-- and everything looked normal as well. In fact, if I excluded the Idle process and added up the CPU usage numbers, I arrived at a total of only 2-5% CPU usage. This was nothing like what the other sections of the Task Manager reported.
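The cross-check I did by hand amounts to a simple sum. Here's a sketch of it over a hypothetical snapshot of the Details tab; the process list and percentages are made up for illustration, not what my machine showed.

```javascript
// Hypothetical, made-up snapshot of Task Manager's "Details" tab.
const snapshot = [
  { name: 'System Idle Process', cpu: 97 },
  { name: 'chrome.exe', cpu: 2 },
  { name: 'explorer.exe', cpu: 1 },
  { name: 'svchost.exe', cpu: 0 },
];

// Sum the CPU usage of every process except the System Idle Process,
// i.e. the load that real work is putting on the processor.
function busyCpuPercent(processes) {
  return processes
    .filter((p) => p.name !== 'System Idle Process')
    .reduce((total, p) => total + p.cpu, 0);
}

console.log(`Busy: ${busyCpuPercent(snapshot)}%`); // Busy: 3%
```

A result in the low single digits while the headline graph reads 100% is exactly the mismatch that pointed away from a rogue process.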

I also used the excellent "HWMonitor" program to check my temperatures. My CPU ran at approximately 80 degrees Celsius, which was the obvious cause of the fans.

Resource Monitor also reported 100% CPU usage across all 6 cores, though like the "details" tab in Task Manager, there were no processes that used more than 1-2% CPU. I thought that this might be some sort of bug, so I checked a third party task manager called "Process Explorer". It too reported that all processes used less than 1-2% total CPU usage, despite the high temperatures and obvious problem.

Next, I performed a full scan of my computer with both Windows Defender and Malwarebytes, hoping that it wasn't the result of a virus or rootkit. To my relief, the scans revealed nothing.

At this point, I was dumbfounded. Something prevented my CPU from throttling down, yet nothing consumed more than a single percentage point of my CPU except the System Idle Process, which was normal. I thought back to when the problem started and tried to remember what I had installed: nothing. Remember, I installed the Oculus software much earlier in the week. The only significant change was that I had plugged in the Rift and tracking camera, but I had already unplugged those to see if their mere presence caused the CPU issue. I was partially in denial: my new toy was the only thing that had changed, yet I didn't want to accept that it was the culprit. There wasn't really any evidence to suggest it was, anyhow.

As a last-ditch effort, I booted into Safe Mode. This, supposedly, would tell me whether third-party software was responsible. As my computer turned back on, I was crossing my fingers. My heart sank as I heard the fans spin back up at full force. I checked Task Manager once again, and my fears were confirmed: 100% CPU usage.

Out of ideas and desperate to get back into the virtual realm, I simply reformatted my computer and hoped this was the end of it.

As I reinstalled my software, I had doubts in the back of my mind. I knew that the last major change to my computer was related to the Rift, so I worried that installing the software again would bring the issue back, and I would be stuck with the hard choice of letting my CPU run hot or purchasing a new, more efficient motherboard and CPU. Some hours later, once the software, my drivers, and everything else I needed were back on my computer, I once again tensed up as I launched a Rift game.

Though the fans spun up while I played Lucky's Tale, shutting down the game and pulling the headset off resulted in the fans once again lowering to a low hum. It wasn't my Rift.

Or, so I thought.

The Problem Returns

The very next day, the problem relapsed. I was heartbroken: the Oculus software and Rift games were literally the only pieces of software I had installed, so they had to be the culprit. I once again went through the steps: Task Manager, Resource Monitor, Process Explorer, and HWMonitor to verify temperatures. All of the same symptoms were recurring.

I eventually checked the power management settings built into Windows. I knew there were "Maximum Processor State" and "Minimum Processor State" settings, and it was possible those had been changed somehow. They were normal, though: 5% minimum and 100% maximum. To my surprise, when I changed the "Maximum" value to 50%, the processor speed in Task Manager also dropped by half (3.2 GHz to about 1.57 GHz) and the fans spun down. The "Utilization" percentage of my CPU, however, was now locked at 50%. I suppose it made sense: I had capped the processor's speed at 50%, so whatever was using up my entire CPU was now using all of that 50%.

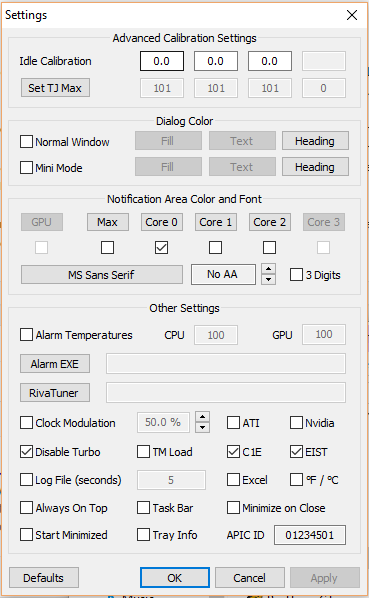

Instead of this being an issue with Windows, maybe it was a problem with Intel's EIST, which could also have been disabled. There are a couple of programs that can show the status of Intel EIST, including RealTemp. To my dismay, though, EIST was enabled as far as I could tell.

Once again, I had to hunt for any leads I could. At this point, I was doubtful that there was any process on my machine that was actually using up 100% of my CPU, so I focused on power management and the throttling issue.

The Discovery and Fix

Eventually, I came upon the documentation for the Windows power management configuration. Though Windows exposes a great deal of power configuration options in the control panel, there are many others that are not exposed in the GUI and can only be accessed through the command-line tool PowerCfg[4]. One setting in particular was of interest, with the alias "IDLEDISABLE". Its description was a massive clue:

IDLEDISABLE specifies if the processor idle states are disabled on the system. If disabled, the kernel spins in a loop when there is no code to execute on a processor instead of invoking a transition to an idle state.

With the fans still running at full, I queried the power management configuration of my computer:

```
PowerCfg /QH
```

Sure enough, the IDLEDISABLE setting was set to 0x00000001 for AC power, which is the only setting that matters for a desktop PC:

```
Power Setting GUID: 5d76a2ca-e8c0-402f-a133-2158492d58ad (Processor idle disable)
GUID Alias: IDLEDISABLE
Possible Setting Index: 000
Possible Setting Friendly Name: Enable idle
Possible Setting Index: 001
Possible Setting Friendly Name: Disable idle
Current AC Power Setting Index: 0x00000001
Current DC Power Setting Index: 0x00000000
```

Flipping this back to "0" is fairly easy. From an administrative command prompt, you need to type two commands:

```
C:\WINDOWS\system32>PowerCfg /SETACVALUEINDEX SCHEME_CURRENT SUB_PROCESSOR IDLEDISABLE 000
C:\WINDOWS\system32>PowerCfg /SETACTIVE SCHEME_CURRENT
```

This does two things: it sets the IDLEDISABLE property on the current, active power management scheme to a value of "0" (i.e. off), and then re-applies the active scheme so that the new value takes effect. Once I performed those two steps, my fans immediately spun back down and Task Manager reported that everything was back to normal.
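If you'd rather script the check, here's a minimal Node.js sketch. It assumes the English-language `PowerCfg /QH` output format shown above (the field names are matched as plain text), pulls out the current AC and DC indexes for IDLEDISABLE, and, only on Windows and only when the AC value is 1, runs the same two commands. The function names are my own, and the fix itself still needs an administrative prompt.

```javascript
const { execFileSync } = require('child_process');

// Find the IDLEDISABLE block in `PowerCfg /QH` output and read its current
// AC/DC setting indexes (e.g. "0x00000001" -> 1). Assumes English output.
function parseIdleDisable(output) {
  const start = output.indexOf('GUID Alias: IDLEDISABLE');
  if (start === -1) return null; // setting not listed
  const block = output.slice(start);
  const ac = block.match(/Current AC Power Setting Index:\s*(0x[0-9a-fA-F]+)/);
  const dc = block.match(/Current DC Power Setting Index:\s*(0x[0-9a-fA-F]+)/);
  if (!ac || !dc) return null;
  return { ac: parseInt(ac[1], 16), dc: parseInt(dc[1], 16) };
}

// The same two commands as above, as PowerCfg argument lists.
const FIX_COMMANDS = [
  ['/SETACVALUEINDEX', 'SCHEME_CURRENT', 'SUB_PROCESSOR', 'IDLEDISABLE', '000'],
  ['/SETACTIVE', 'SCHEME_CURRENT'],
];

function checkAndFix() {
  if (process.platform !== 'win32') {
    console.log('PowerCfg is Windows-only; skipping.');
    return;
  }
  const output = execFileSync('powercfg', ['/QH'], { encoding: 'utf8' });
  const state = parseIdleDisable(output);
  if (state === null) {
    console.log('IDLEDISABLE not found in PowerCfg output.');
  } else if (state.ac === 1) {
    for (const args of FIX_COMMANDS) execFileSync('powercfg', args);
    console.log('IDLEDISABLE was 1 on AC power; reset to 0.');
  } else {
    console.log('IDLEDISABLE is already 0 on AC power.');
  }
}

checkAndFix();
```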

Post-Mortem

This simple, two-line fix was the culmination of many hours of diagnosis, Google searches, and software updates. But why did this even occur in the first place?

System Idle Process

First, you will need a little background on the System Idle Process. At a basic level, a CPU must always be doing something. However, the processes a CPU runs are triggered at different times, and there will often be moments when no user process is ready to run. So, what is your CPU supposed to do? This is where the "System Idle Process" comes in on Windows: it is the code that runs when there's nothing else to do[5]. Essentially, the Idle process just soaks up otherwise unused CPU cycles.

This, of course, is why the Idle process is of no concern under normal circumstances. If you recall, I originally dismissed the Idle process in the Task Manager's "Details" tab and in Process Explorer. Though it took up 99% of the CPU time, this was normal: the Idle process yields cycles to user processes as needed, and it does not normally prevent a CPU from throttling down. In fact, though the Idle process was originally analogous to a while(1){} loop, modern versions of Windows actually run instructions that enable the power-saving features of modern processors.

IDLEDISABLE

Of course, the above is true only if the system is allowed to idle. As you may have guessed, the IDLEDISABLE setting disables the processor's idle states, causing the Idle process to revert to a basic while loop. In Microsoft's words, "the kernel spins in a loop when there is no code to execute on a processor instead of invoking a transition to an idle state".

This, of course, explains why my system never actually felt sluggish, despite the Task Manager reporting 100% CPU usage. Though the Idle process was consuming 100% of my CPU by being in a loop, it yields to user processes, meaning that other programs on my PC could still operate at a normal level.
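The difference between a spinning idle loop and a real idle state can be loosely imitated in user space. This Node.js sketch is only an analogy (user code can't put the CPU into idle states itself): it waits the same amount of wall-clock time twice, once by spinning in a loop, much like the kernel does with IDLEDISABLE on, and once by blocking with `Atomics.wait`, which lets the OS deschedule the thread, and compares the CPU time each wait burns.

```javascript
// Wait `ms` milliseconds by spinning: the core stays busy the whole time.
function spinWait(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Do nothing; just burn cycles (analogous to IDLEDISABLE=1).
  }
}

// Wait `ms` milliseconds by blocking: the OS can deschedule the thread.
function blockingWait(ms) {
  const cell = new Int32Array(new SharedArrayBuffer(4));
  Atomics.wait(cell, 0, 0, ms); // nobody notifies, so this sleeps until timeout
}

// CPU time (user + system, in milliseconds) consumed while running fn.
function cpuTimeMs(fn) {
  const before = process.cpuUsage();
  fn();
  const delta = process.cpuUsage(before);
  return (delta.user + delta.system) / 1000;
}

const spinCpu = cpuTimeMs(() => spinWait(200));
const blockCpu = cpuTimeMs(() => blockingWait(200));
console.log(`spin:  ~${spinCpu.toFixed(0)} ms of CPU for 200 ms of waiting`);
console.log(`block: ~${blockCpu.toFixed(0)} ms of CPU for 200 ms of waiting`);
```

Both waits take the same wall-clock time, but only the spinning one keeps a core busy, which is why a spinning Idle process shows up as heat and fan noise rather than as sluggishness.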

Oculus Rift and Latency

But, how does this all relate to the Oculus Rift?

The Rift, and virtual reality in general, is very latency sensitive. The device runs at 90 frames per second to reduce motion sickness for the user, meaning that the system only has about 11 ms to actually render a frame. That's a crazy small amount of time for a complete scene in a video game to have its physics calculated by the CPU, rendered by the GPU, and sent to the headset. Dipping below this 90 fps target also is extremely bad for motion sickness, so Oculus implements a ton of different features (such as asynchronous time warp) to help reduce latency as much as possible.
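The 11 ms figure is just the reciprocal of the refresh rate. For comparison with an ordinary 60 Hz monitor:

```javascript
// Time available to produce each frame at a given refresh rate.
function frameBudgetMs(fps) {
  return 1000 / fps;
}

console.log(frameBudgetMs(90).toFixed(1)); // 11.1 (Rift CV1 at 90 Hz)
console.log(frameBudgetMs(60).toFixed(1)); // 16.7 (a typical 60 Hz monitor)
```

Everything for a frame, CPU and GPU work alike, has to fit inside that budget, and the Rift's is about a third smaller than a regular monitor's.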

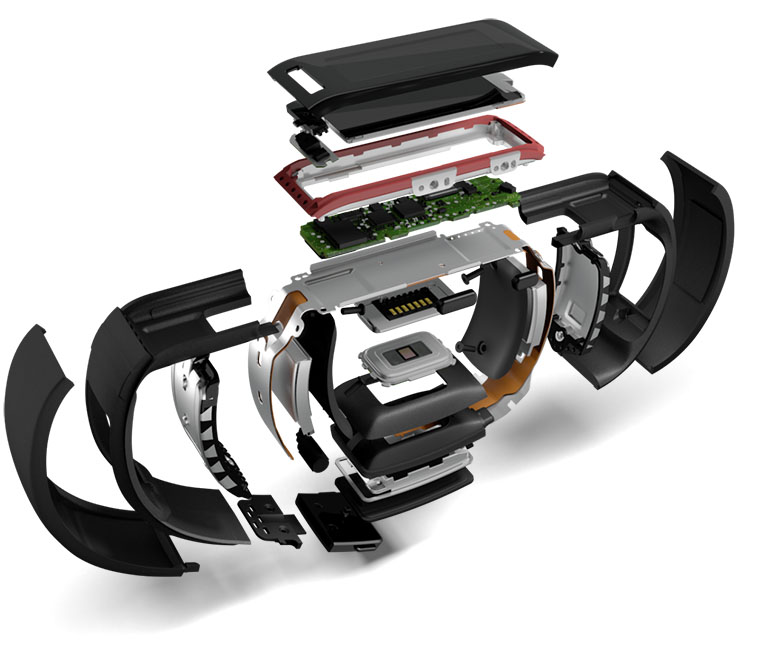

The Rift also implements both rotational and positional tracking, meaning that you can tilt your head in space to have your character in a game do the same, or you can even lean forwards, backwards, or to the side. The Rift has an inertial measurement unit (IMU) package inside of it, with an accelerometer, gyroscope, and magnetometer to determine the movement of the headset. This package runs at a much higher frequency than the similar sensors in your phone-- up to 1000 Hz (one thousand measurements per second), in fact. The Rift also sends these data points every 2 ms over the USB cable.

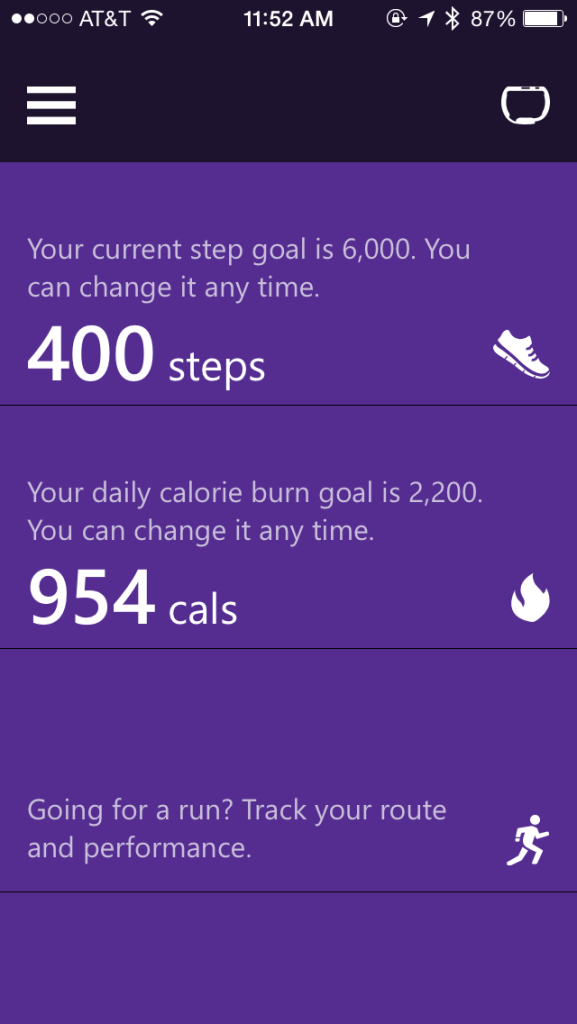

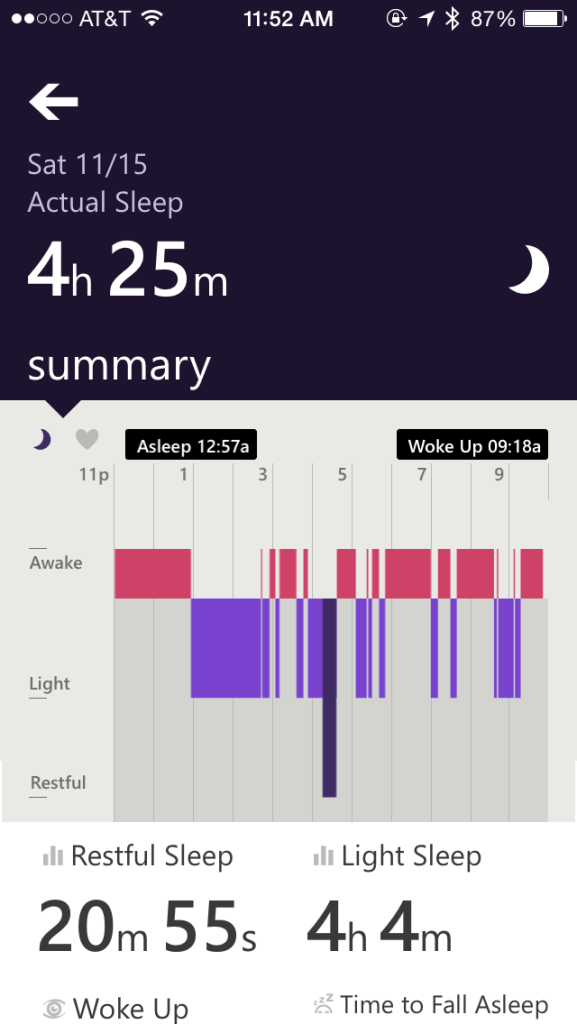

All of these innovations are needed to reduce latency as much as possible, but there's one thing Oculus does that, to my knowledge, they haven't talked about: changing the power plan of your computer to "High Performance". Windows has a couple of default "Power Plans". These plans contain the settings (such as the amount of idle time before your monitor turns off and your computer goes to sleep) that govern the performance and power usage of your computer. By default, a desktop computer is set to "Balanced", which mixes options that let your computer perform well when needed while still saving electricity. There's also a "High Performance" plan that changes characteristics of your computer to help it perform at its maximum at all times.

What you may not notice is that when you actually put your headset on[6], the Oculus software changes the power plan of your computer to "High Performance". This is immediately toggled back when you take your headset off. The GIF below shows me putting my finger over the sensor; you can see the Oculus software automatically switching the power plan to "High Performance".

However, the default "High Performance" power plan in Windows has IDLEDISABLE set to "0", meaning that the idling features are enabled out of the box on Windows desktops, so the plan switch alone can't explain the problem. On top of that, in my case IDLEDISABLE was actually set to "1" on the default "Balanced" plan, which is what kept my fans running.

It turns out that CCP (the developers of EVE: Valkyrie) intentionally set IDLEDISABLE to "1". This isn't exactly far-fetched: Intel has a presentation on low-latency computing in which they suggest that developers do just that to reduce latency as much as possible.

They may intend to set the flag to "1" when the game is launched and back to "0" when it is closed, but if the game exits abnormally (for example, if it crashes or is force-closed from Task Manager), the IDLEDISABLE flag can remain at "1", causing additional heat and fan noise. This is compounded if EVE: Valkyrie sets the flag to "1" while the power plan is still "Balanced", since that is the plan most computers switch back to once the Oculus Rift headset is removed.

The Perfect Storm: My Old Hardware

Other than the fact that there are only a couple thousand Oculus Rift devices in the wild, why has no one discovered this before?

My hardware might be an explanation: it's old, more power-hungry than newer processors, has a stock CPU fan that definitely needs cleaning, and the thermal paste has probably dried up by now. It was already running warmer than it should, but with it running at 3.3 GHz and with IDLEDISABLE set to "1", it heated up enough to make my loud fan kick in. With a newer, more power-efficient processor or a better CPU cooler, I might not have even noticed the issue. After all, nothing felt sluggish[7].

I hope this gives some insight into the process I went through to debug this problem and saves someone time. If Task Manager shows 100% CPU usage and your CPU clock at its maximum, but no process is obviously responsible, you might want to check your system's power management configuration to see whether IDLEDISABLE is set to "1" when it should be back at the default of "0".

This issue has been reported to EVE: Valkyrie support, and I will update this article as I hear back from them.

- April 4th, 2016 @ 5:40 PM PST - Reported the issue to EVE: Valkyrie support

Special thanks to Reddit user /u/GodLikeVelociraptor, who seems to have pinpointed that it is in fact EVE: Valkyrie causing the issue, not the Oculus software itself. I have updated the article to reflect this fact.

- Considering that only a couple hundred to maybe one thousand Oculus Rifts arrived on launch day, and only for the original Kickstarter backers, some may hesitate to consider it anything but a soft launch. By the end of that week, Oculus CEO Brendan Iribe informed customers that a component shortage had resulted in a delay. ↩

- Lucky's Tale, though not a game I would play on a traditional monitor, is actually quite impressive in virtual reality. You can lean in and look at everything like it's a miniature model, and you can even headbutt things in the game world thanks to the positional tracking features of the headset. ↩

- Technologies like Intel's Turbo Boost will actually let your processor run above its "normal" clock speed when it can do so within thermal limits. ↩

- You must use an administrative command prompt to change the PowerCfg settings. ↩

- A better explanation of this is provided by Gustavo Duarte. ↩

- There is a sensor on the Rift that lets it know when the headset is put on. This allows for the Oculus Home software to launch and the OLED screens to turn on, saving power and the longevity of the screens themselves by not having them be always on. ↩

- Remember, the Idle process was taking up the majority of my CPU in what was essentially a "while" loop. Though it let other software take over CPU resources when needed, it still generates heat if the processor is not allowed to throttle down and idle. ↩

Machine learning is an extremely important topic in computer science. We've come to the point where there are some problems that just cannot be solved with traditional algorithms and code, and machine learning is the solution.

I haven't had a ton of experience with machine learning beyond Andrew Ng's amazing Machine Learning course, and I recently set out to change that.

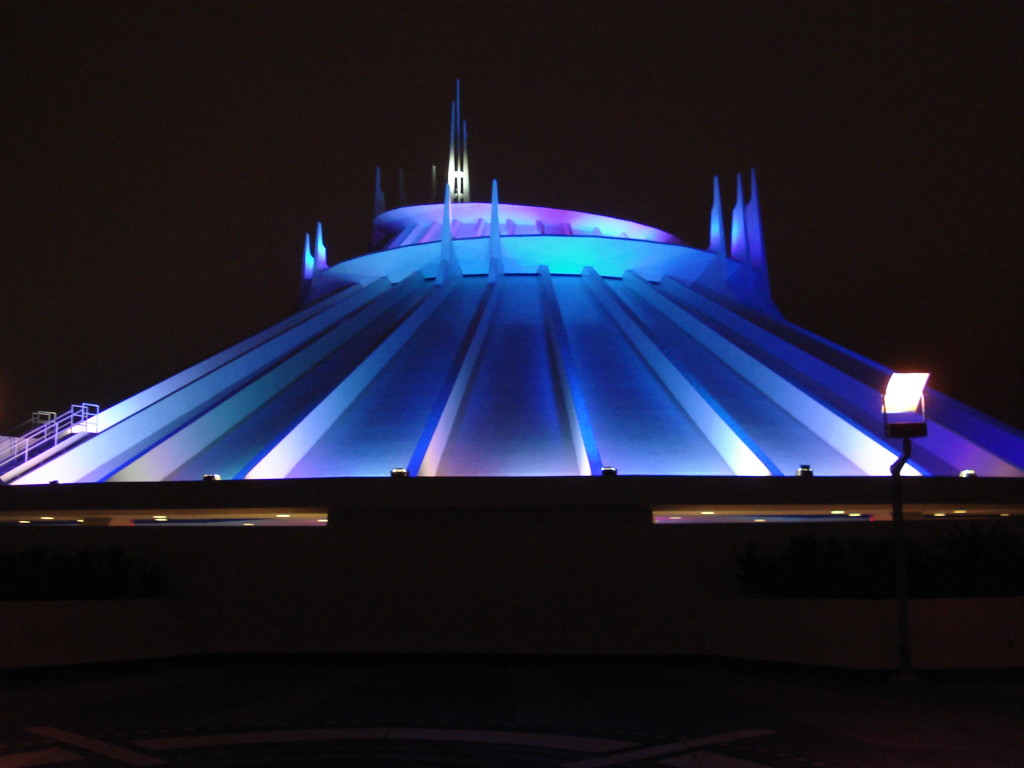

Walt Disney World

Walt Disney World parks attract tens of millions of visitors per year. In fact, Magic Kingdom, the flagship park, hosts about 20 million visitors per year by itself. Given the fixed number of attractions and ride capacities measured in thousands of visitors per hour, this can translate to extremely long wait times during peak seasons.

In general, you can predict what sorts of wait times to expect based on the day of the week and the season-- September, for example, tends to have fairly low attendance due to kids going back to school, while Thanksgiving is one of the most popular days of the year and often elicits hours-long wait times for some attractions.

Using this information, you can make a general decision on when to take your vacation. Most people don't visit Walt Disney World and stay on a strict schedule; after all, it's supposed to be a vacation, not a drill.

But there's a dedicated few who are committed to visiting as many attractions as possible, and a general sense of how crowded the parks are is not enough.

Predicting Wait Times with Neural Networks

With my mission to learn how to use machine learning techniques in a real setting, I decided that predicting theme park wait times would be a good start. The data is available, constantly flowing, and generally follows patterns.

I had a couple of choices: use a conventional regression model, the obvious choice for a job like this (continuous inputs generating a continuous output), or use a neural network regression model. Ultimately, for a variety of reasons, I opted for neural networks.

Many people may believe neural networks are overkill for a simple regression problem, but my experiments with several machine learning libraries determined that it would be the easiest solution to implement and get data from, given that I was using Node.js.

With this starting point, Park Genius was born.

Model Training

Before we can glean any insights from the raw wait time data, we need to train the neural network model. Every couple of minutes, the official posted wait times are sourced directly from Disney. These numbers are artificially inflated, but they give a general sense of how long the wait will be for an attraction. User-submitted wait times are also supported and weighted more heavily when training the model, but with a user base of exactly one[1], there wasn't a lot of user-submitted data to use.

Fortunately, the data is both numerous and fairly high quality. There's no real bogus data that needs to be removed, and some of the noise where a wait time will go from 60-to-90-to-60 in the course of a couple minutes is smoothed by the prediction model.

Neural networks are great at finding patterns in data on their own. However, wait time trends have more nuanced patterns than simply fluctuating over the course of the day. In fact, there are many "cycles" that affect wait times at theme parks:

- Time of the day. The simple one.

- Day of the year. Holidays are extremely busy compared to a normal day in the same month.

- Day of the week. Weekdays are generally calmer.

- Month of the year. During peak seasons, the crowds can be much higher than during the off season.

Additionally, there are special events (such as Disney's Food and Wine Festival at Epcot's World Showcase) that draw a significant number of visitors but don't necessarily occur on the same set of days every year.
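One common way to feed cycles like these to a neural network is to map each one onto a circle with sine and cosine, so that 11:59 PM sits next to midnight and December sits next to January. This is a sketch of that general technique, not a description of Park Genius's actual inputs; `timeFeatures` is a hypothetical helper, and holidays and special events would still need separate features.

```javascript
// Encode a cyclic value (minute of day, day of week, month...) as a point on
// the unit circle, so the end of a cycle lands close to its beginning.
function encodeCyclic(value, period) {
  const angle = (2 * Math.PI * value) / period;
  return [Math.sin(angle), Math.cos(angle)];
}

// Hypothetical feature vector for one wait-time sample.
function timeFeatures(date) {
  const minuteOfDay = date.getHours() * 60 + date.getMinutes();
  return [
    ...encodeCyclic(minuteOfDay, 24 * 60), // time of day
    ...encodeCyclic(date.getDay(), 7),     // day of week (0 = Sunday)
    ...encodeCyclic(date.getMonth(), 12),  // month of year (0 = January)
  ];
}

console.log(timeFeatures(new Date(2016, 1, 23, 14, 30)));
```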

Because of this, a single neural network didn't suffice: my first experiments produced somewhat reasonable wait time predictions from a single network, but they could be wildly off when comparing weekdays and weekends.

The breadth of these "cycles" meant that the limited set of data I have collected was going to be an issue. Though Park Genius operates on about 65k data points across all of the attractions in Magic Kingdom (as of Feb. 24th, 2016), this is actually not enough. As previously mentioned, there are trends that go beyond the wait time fluctuations in a single day. This means that, at the moment, if you were to look at the predictions for Thanksgiving 2016 or some other holiday, Park Genius would underestimate the wait times. Unless a historical data archive can be used for training, the prediction model will not be accurate for special days, like holidays, until after they've already occurred in 2016.

Prediction Computation

I chose to build a system of neural networks trained using the factors above. This means that each attraction has multiple networks associated with it, which are consulted at prediction time and combined in a weighted average.
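The combining step can be sketched as a plain weighted average. In this hypothetical version each "network" is reduced to a function returning a predicted wait in minutes, and the weights are made-up numbers; how Park Genius actually chooses its weights isn't covered here.

```javascript
// Combine several models' predictions with a weighted average.
// `models` is a list of { predict: (input) => minutes, weight: number }.
function combinedPrediction(models, input) {
  let weightedSum = 0;
  let totalWeight = 0;
  for (const { predict, weight } of models) {
    weightedSum += weight * predict(input);
    totalWeight += weight;
  }
  if (totalWeight === 0) throw new Error('weights must not all be zero');
  return weightedSum / totalWeight;
}

// Hypothetical stand-ins for per-factor networks on one attraction.
const models = [
  { predict: () => 45, weight: 3 }, // e.g. a time-of-day network
  { predict: () => 60, weight: 1 }, // e.g. a day-of-week network
];

console.log(combinedPrediction(models, {})); // 48.75
```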

For the current day, the model is retrained and the predictions regenerated every hour. This means that as the day goes on, its predictions are updated and made more accurate. Watching this process is actually quite fascinating, because you can see the prediction lines change over the course of the day as they become more accurate.

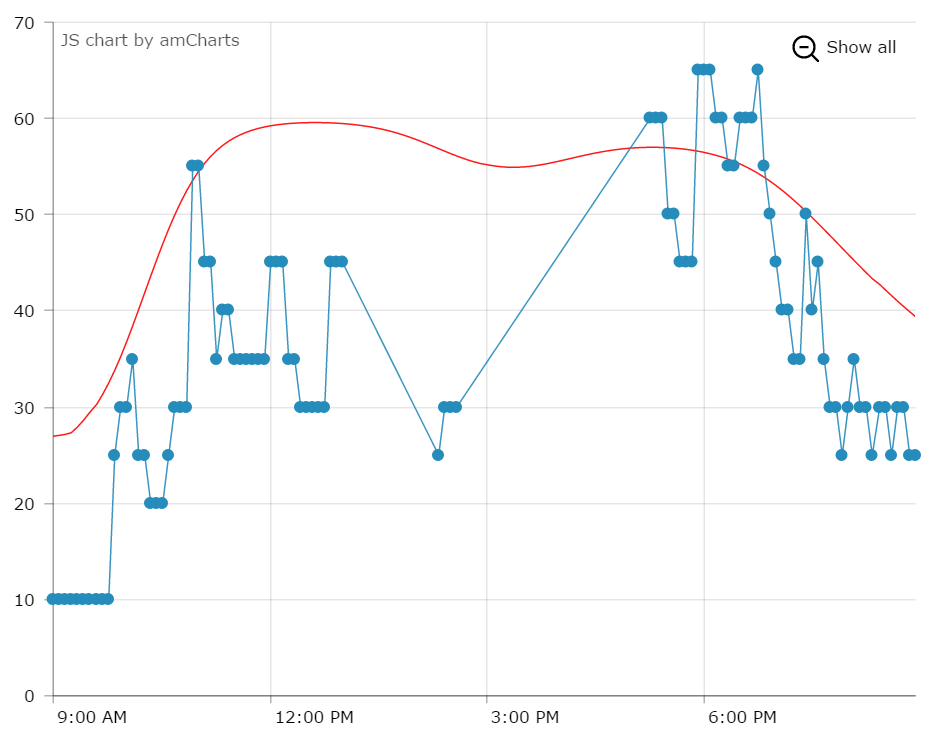

Prediction data is available on the site as a simple line graph that shows both the official wait times, as well as the predictions that Park Genius has come up with.

Historical Wait Times and Predictions for Space Mountain, Feb. 23rd 2016

Using the Data

Wait time predictions are fascinating, but by themselves not extremely useful. After all, a visitor to Walt Disney World will probably not be able to guess exactly when they will visit which attraction-- especially if it's their first trip.

However, this data can be used in some interesting ways, such as building a touring plan for the theme parks. Park Genius tracks several different attributes of the theme parks and their attractions: for example, wait times and predictions, the length and intensity of each attraction, and its geographic location.

Using this data together, I can build a touring plan for someone that wants to visit Magic Kingdom, prefers roller coasters, but wishes to skip kid-focused attractions like "Stitch's Great Escape!".

Solving for this "optimal", yet customized, touring plan is essentially a time-dependent travelling salesman problem: the weights change over the course of the day, but you still want to find the quickest route (in terms of travel time and time spent waiting in line) through every attraction.
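Solving even the static travelling salesman problem exactly gets expensive quickly, so a simple baseline is a greedy nearest-neighbour heuristic: at each step, go to the attraction that finishes soonest once walking time, the predicted wait at your arrival time, and the ride itself are added up. This is a sketch of that heuristic with hypothetical inputs (`walk`, `wait`, and the minute values are illustrative), not the Park Genius planner itself.

```javascript
// Greedy touring plan: repeatedly pick the attraction that minimizes
// walk time + predicted wait at arrival time + ride duration.
function greedyPlan(start, attractions, startMinute, walkMinutes, predictedWait) {
  const plan = [];
  const remaining = new Set(attractions.map((a) => a.name));
  let here = start;
  let clock = startMinute;

  while (remaining.size > 0) {
    let best = null;
    for (const a of attractions) {
      if (!remaining.has(a.name)) continue;
      const arrive = clock + walkMinutes(here, a.name);
      const done = arrive + predictedWait(a.name, arrive) + a.rideMinutes;
      if (best === null || done < best.done) best = { name: a.name, done };
    }
    plan.push(best.name);
    remaining.delete(best.name);
    here = best.name;
    clock = best.done;
  }
  return { plan, finishMinute: clock };
}

// Hypothetical example data (minutes after park opening).
const rides = [
  { name: 'Space Mountain', rideMinutes: 3 },
  { name: 'Big Thunder Mountain', rideMinutes: 4 },
  { name: 'Seven Dwarfs Mine Train', rideMinutes: 3 },
];
const walk = (from, to) => (from === to ? 0 : 10); // flat 10-minute walks
// Made-up waits that grow through the morning; Mine Train is busiest.
const wait = (name, minute) =>
  (name === 'Seven Dwarfs Mine Train' ? 30 : 15) + Math.floor(minute / 10);

const result = greedyPlan('Entrance', rides, 0, walk, wait);
console.log(result.plan.join(' -> '), `(done at minute ${result.finishMinute})`);
// Space Mountain -> Big Thunder Mountain -> Seven Dwarfs Mine Train (done at minute 111)
```

Greedy choices aren't guaranteed to be optimal, but because the wait function is evaluated at the actual arrival time, the heuristic does respect the fact that the weights change over the day.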

A preliminary version of the planning software generated somewhat reasonable-looking plans, and they will only get better with some extra tuning.

The wait time prediction aspect of Park Genius was fun to implement and a great way for me to use neural networks in a practical application, but I'm even more excited to actually use the data in interesting ways. The planner is close to completion, and I have several new ideas to build on the data set I've collected. I can't wait to bring them to fruition.

Be sure to check out Park Genius and the predictions it generates. If you're planning on visiting Magic Kingdom anytime soon, it might even be helpful for you.

- That one user, me, doesn't even live anywhere near a Disney park. ↩

The Oculus Rift is finally available for pre-order and many are having sticker shock with the $599 price tag. With tax and shipping costs (really? shipping costs on a $599 device? I suppose I might be spoiled by Amazon), it can come out to almost $700 in the United States. However, this is pretty close to the price of a high-end monitor (such as the curved 34-37 inch models), and you get a decent amount of hardware included: motion tracking, the tracking camera, Xbox controller, Oculus Remote, etc.

For more information on Needlepoint, my JavaScript dependency injection system, you can take a look at my introductory blog post or the README file on GitHub.

I have updated Needlepoint to version 1.0.5 on NPM. There are a couple of changes, none of which should break existing code when you upgrade from an older version.

Needlepoint is now pre-compiled

This means you don't have to have an entry for the library in your Babel ignore configuration. My first blog post mentions that your Babel configuration should look something like this:

```javascript
require('babel/register')({
  optional: ['es7.decorators'],
  // A lot of NPM modules are precompiled so Babel ignores node_modules/*
  // by default, but Needlepoint is NOT pre-compiled so we change the ignore
  // rules to ignore everything *except* Needlepoint.
  ignore: /node_modules\/(?!needlepoint)/
});
```

The ignore property is no longer required.

Updated for Babel 6

I also updated the library and instructions to use Babel 6. If you are using Needlepoint with Babel 6, you will have to use the new presets property in your Babel configuration and add the babel-plugin-transform-decorators-legacy plugin. Babel 6 removed the old ES7 decorator functionality while its contributors rewrite it; in the meantime, the legacy plugin restores the old decorator behavior.

To do so, you must install the plugin's NPM package:

```shell
$ npm install --save babel-plugin-transform-decorators-legacy
```

Then, once you install the plugin package, you can add it to your Babel configuration:

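```javascript
// Babel 6 moved the require hook into the separate babel-register package
// (install it alongside the babel-preset-* packages listed below).
require('babel-register')({
    presets: ['es2015', 'stage-0', 'stage-1'],
    plugins: ['babel-plugin-transform-decorators-legacy']
});
```

As you can see in the sample above, I've also included the es2015, stage-0, and stage-1 presets for Babel. You can adjust these as needed for your application.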

Though I'm an AngularJS type of person, I regularly follow other JavaScript frameworks to keep up with the rest of the world. React, along with the Flux design pattern, is a relatively popular alternative to the Angular ecosystem. However, React+Flux is extremely different from other frameworks. For starters, React isn't a full JavaScript framework by itself, and Flux isn't a library at all: it's a type of architecture. Tero Parviainen wrote an amazing introduction to the Redux+React ecosystem that explains not only how the two libraries work, but also how they fit together conceptually and why the Flux architecture and immutability make sense in a web application.

In the past, I've done a lot of work with PHP and the Laravel framework. One of the coolest features of Laravel is its Inversion of Control (IoC) system, which dynamically injects dependencies into your application at runtime.

This is beneficial for many reasons, including modularity, readability, and testability. With a dependency injected system, you can simply request that your application receive an instance of an object, and the DI container will do all of the work to initialize the object and all of its dependencies.

Dependency injection is a relatively advanced topic, but the benefits outweigh the cost. For example, consider the following scenario:

```php
<?php

class Config {
    /** Loaded configuration values, keyed by name */
    private $data = [];

    public function __construct() {
        // ... load the configuration values into $this->data
    }

    /**
     * Get the configuration item with the specified key
     */
    public function get($key) {
        return $this->data[$key] ?? null;
    }
}

class Database {
    /** @var Config */
    private $config;

    /**
     * Construct a new database instance using the specified configuration.
     */
    public function __construct(Config $config) {
        $this->config = $config;
    }

    public function connect() {
        $host = $this->config->get("db.host");
        $name = $this->config->get("db.name");

        // ... perform the rest of the database initialization
    }
}
```

This is an extremely trivial example, but as you can see, our database object depends on the configuration. If we only ever create a single database instance, this isn't a problem: we can create a new configuration object and pass it to the constructor. But what if our application is composed of multiple controllers, utilities, and snippets of code that all need a database instance? Suddenly, we either have to create a large number of database objects (and, consequently, a large number of configuration objects), or somehow pass a single instance around.

This is the problem dependency injection solves: DI takes over the job of passing instances around and manages it for you. If we apply our example above to a dependency injected system, we never have to explicitly create a configuration object; the DI system does it for us.
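To make that concrete, here is a deliberately tiny container in plain JavaScript. It is only a sketch of the idea, not how Laravel or Needlepoint actually work; the `Container` class and its `register` and `resolve` methods are hypothetical names. Each class is registered along with the classes its constructor needs, `resolve` builds those dependencies recursively, and classes registered as singletons are created once and then reused.

```javascript
// A hypothetical, minimal dependency injection container (illustration only).
class Container {
  constructor() {
    this.registrations = new Map(); // class -> { deps, singleton }
    this.instances = new Map();     // class -> cached singleton instance
  }

  // Record which classes `Cls` needs in its constructor, in argument order.
  register(Cls, deps = [], { singleton = false } = {}) {
    this.registrations.set(Cls, { deps, singleton });
    return this;
  }

  // Build an instance of `Cls`, resolving its dependencies first.
  resolve(Cls) {
    if (this.instances.has(Cls)) {
      return this.instances.get(Cls);
    }
    const { deps, singleton } = this.registrations.get(Cls) || { deps: [], singleton: false };
    const args = deps.map((dep) => this.resolve(dep));
    const instance = new Cls(...args);
    if (singleton) {
      this.instances.set(Cls, instance);
    }
    return instance;
  }
}

// The Config/Database pair from the PHP example, translated to JavaScript.
class Config {
  constructor() {
    this.data = { "db.host": "localhost", "db.name": "app" };
  }
  get(key) {
    return this.data[key];
  }
}

class Database {
  constructor(config) {
    this.config = config;
  }
}

const container = new Container()
  .register(Config, [], { singleton: true })
  .register(Database, [Config]);

const a = container.resolve(Database);
const b = container.resolve(Database);
console.log(a === b);               // false: Database is not a singleton here
console.log(a.config === b.config); // true: both share the one Config instance
```

Note that `Config` is never created by hand: resolving `Database` creates it. The sketch has no cycle detection, so two classes that depend on each other would recurse forever.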

Laravel's IoC system is great: it's wired up throughout the framework so that many of the methods the framework calls for you, such as controller actions, have their dependencies injected automatically, and it even uses the type hints in the method signature to determine what dependencies the method has.

For example, Laravel's IoC system would use the following method signature to pass in the current HTTP request object and a database instance, automatically:

```php
public function getUsersController(Request $request, Database $db)
{
    // Laravel resolves $request and $db from the type hints above
}
```

The DI pattern doesn't just apply to PHP and Laravel: JavaScript frameworks such as Angular and Aurelia have their own dependency injection systems, with the latter even using ES7-style decorators. Unfortunately, these systems are tightly coupled with their frameworks, meaning that they aren't very useful for developers who want to use them with Node.js.
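JavaScript has no runtime type hints, so a container can't read dependencies off a signature the way Laravel does. One workaround, used by AngularJS's implicit annotation, is to read the constructor's parameter names from the function's source text. The sketch below illustrates that approach under simple assumptions (a plain, single-line parameter list with no default values); `parameterNames`, `registry`, and `resolveByName` are hypothetical helpers, not part of any library.

```javascript
// Hypothetical helper: read constructor parameter names from the class source.
// This relies on Function.prototype.toString(), so it breaks once a minifier
// renames parameters.
function parameterNames(Cls) {
  const match = Cls.toString().match(/constructor\s*\(([^)]*)\)/);
  if (!match) {
    return []; // no explicit constructor, so no dependencies
  }
  return match[1]
    .split(",")
    .map((name) => name.trim())
    .filter((name) => name.length > 0);
}

class Config {
  get(key) {
    return `value of ${key}`;
  }
}

class Database {
  constructor(config) {
    this.config = config;
  }
}

// Hypothetical registry mapping parameter names to the classes that provide them.
const registry = { config: Config };

function resolveByName(Cls) {
  const args = parameterNames(Cls).map((name) => {
    const Dep = registry[name];
    if (!Dep) {
      throw new Error(`No provider registered for "${name}"`);
    }
    return resolveByName(Dep);
  });
  return new Cls(...args);
}

const db = resolveByName(Database);
console.log(db.config.get("db.host")); // value of db.host
```

The catch is that minifiers rename parameters, which silently breaks name-based lookup. That fragility is one reason to declare dependencies explicitly instead, as Needlepoint does with decorators.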

Needlepoint: Dependency Injection for Modern JavaScript

Needlepoint is a new DI framework for JavaScript environments that supports the latest ES6 and ES7 features. Everything works with ES6 classes, and the dependency injection parameters are defined using the proposed ES7 decorators syntax.
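Decorators can look like magic, but under the legacy decorators proposal that Babel implemented, a class decorator is just a function that receives the class and may return a replacement. The sketch below writes out roughly what `@dependencies(Config)` followed by `@singleton` amounts to without the decorator syntax. The two decorator functions and the `injectDependencies`/`injectSingleton` property names are hypothetical; they only record metadata and are not Needlepoint's implementation.

```javascript
// Hypothetical decorator functions that only record metadata on the class.
// They are NOT Needlepoint's implementation; the property names are made up.
function dependencies(...deps) {
  return function (target) {
    target.injectDependencies = deps;
  };
}

function singleton(target) {
  target.injectSingleton = true;
}

class Config {}

// With the legacy decorators plugin you would write:
//
//   @dependencies(Config)
//   @singleton
//   class Database { ... }
//
// Class decorators are applied bottom-up (the one closest to the class runs
// first), and a decorator that returns undefined keeps the class as-is, so
// without decorator syntax this is roughly:
let Database = class Database {
  constructor(config) {
    this.config = config;
  }
};
Database = [dependencies(Config), singleton]
  .reverse()
  .reduce((cls, decorate) => decorate(cls) || cls, Database);

console.log(Database.injectSingleton);                  // true
console.log(Database.injectDependencies[0] === Config); // true
```

A container can then read that metadata when it constructs the class, which is roughly the information a decorator-driven DI system needs in order to know what to inject.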

Need to learn how to use the new ES6 and ES7 features? Check out my in-development course on Modern JavaScript.

Declaring Dependencies of a Class

There are two key decorators that indicate how a class should be dependency injected. The first is the @dependencies decorator:

```javascript
@dependencies(DependencyOne, DependencyTwo)
export default class ClassWithDependencies {
    // ...
}
```

Simply pass the classes to the decorator, and the dependencies will be injected into the constructor:

```javascript
constructor(instanceOfDependencyOne, instanceOfDependencyTwo) {
    // ...
}
```

Declaring a Singleton

The second decorator is used to declare a class a singleton:

```javascript
@singleton
export default class SingletonClass {
    // ...
}
```

The singleton decorator indicates that no more than one instance of the class should be created in the application. If two classes declare a singleton as a dependency, only one instance is created, and that same instance is injected into every class that declared the singleton as a dependency.

The best way to introduce the library is to simply illustrate how it works:

```javascript
/* config.js */
import {singleton} from 'needlepoint';

@singleton
export default class Config {
    constructor() {
        // ... load the configuration values into this._data
        this._data = {};
    }

    /**
     * Get a configuration value for the specified key
     */
    get(key) {
        return this._data[key];
    }
}

/* database.js */
import {dependencies, singleton} from 'needlepoint';
import Config from './config';

@dependencies(Config)
@singleton
export default class Database {
    constructor(config) {
        this.config = config;
        this.configureDatabase();
    }

    configureDatabase() {
        // ... configure the database with the current configuration instance
    }

    query(q) {
        // ... Perform the specified query
    }
}

/* index.js */
import {container} from 'needlepoint';
import Database from './database';

var db = container.resolve(Database);
db.query("SELECT * FROM users");
```

This example is very similar to the PHP example from earlier: a database needs a configuration object to configure itself. As you can see, our index.js file has a single call to container.resolve(Database), which does all of the magic: the database instance is created and passed an instance of the configuration, and both are singletons. If we were to call container.resolve(Database) again, we would receive the exact same instance that was created the first time.

The same is true for the configuration object-- only a single instance is created for the entire application, so we might add a new "queue" class that uses the same configuration instance:

```javascript
import {dependencies, singleton} from 'needlepoint';
import Config from './config';

@dependencies(Config)
@singleton
export default class Queue {
    constructor(config) {
        this.config = config;
        this.configureQueue();
    }

    configureQueue() {
        // ... connect to the queue and configure it with the currently
        // initialized configuration instance.
    }

    next() {
        // ... get the next item from the queue
    }
}
```

Now, we can run container.resolve(Queue) in our index file and get an instance of the queue class. Since this is the first time the queue is resolved (either explicitly or as a dependency of another object), it is actually instantiated. However, its constructor receives the previously created configuration object: the exact same instance that was passed to the database.

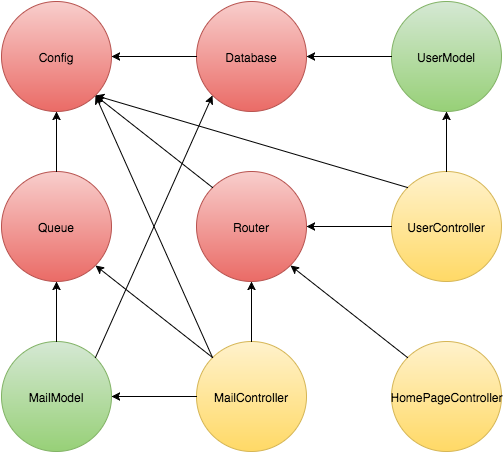

Of course, this also works with more complex dependency graphs. Image having an application with a dependency graph that looks something like this:

Wiring that up manually and keeping track of everything would be a nightmare (and this isn't even close to the complexity of a real application), but with Needlepoint you can simply declare each class's dependencies with the decorator.
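Whatever tool does the wiring, it has to instantiate the graph in an order where every class's dependencies already exist. One common way to find that order is a depth-first topological sort. The sketch below (not Needlepoint's code; `constructionOrder` and the class names in the graph are hypothetical) does that over a plain object and also reports circular dependencies, which constructor injection alone can never satisfy.

```javascript
// Given a dependency graph (name -> names it depends on), return an order in
// which every class can be constructed after its dependencies. Uses a
// depth-first topological sort and throws on circular dependencies.
function constructionOrder(graph) {
  const order = [];
  const state = new Map(); // name -> "visiting" | "done"

  function visit(name, path) {
    if (state.get(name) === "done") return;
    if (state.get(name) === "visiting") {
      throw new Error(`Circular dependency: ${[...path, name].join(" -> ")}`);
    }
    state.set(name, "visiting");
    for (const dep of graph[name] || []) {
      visit(dep, [...path, name]);
    }
    state.set(name, "done");
    order.push(name); // all dependencies were pushed before this class
  }

  for (const name of Object.keys(graph)) {
    visit(name, []);
  }
  return order;
}

// A hypothetical application graph.
const graph = {
  App: ["Database", "Queue", "Mailer"],
  Database: ["Config", "Logger"],
  Queue: ["Config", "Logger"],
  Mailer: ["Config", "Queue"],
  Logger: ["Config"],
  Config: [],
};

console.log(constructionOrder(graph).join(", "));
// Config, Logger, Database, Queue, Mailer, App
```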

To find out more about how to use Needlepoint and dependency injection in your JavaScript applications, visit the project's GitHub repository. The entire thing is open source and written using the latest ES6 features, meaning Babel is required for most JavaScript environments.

You can use Needlepoint in your Node.js applications fairly easily. Simply install it with NPM and make sure you have Babel installed and configured:

```shell
npm install --save babel needlepoint
```

And, in your JavaScript:

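```javascript
require('babel/register')({
    optional: ['es7.decorators'],
    // A lot of NPM modules are precompiled so Babel ignores node_modules/*
    // by default, but Needlepoint is NOT pre-compiled so we change the ignore
    // rules to ignore everything *except* Needlepoint.
    ignore: /node_modules\/(?!needlepoint)/
});

// The require hook only compiles files loaded after this point, so the
// ES6/ES7 application code lives in a separate file. Note the "./" prefix:
// without it, Node looks for a package named app.js in node_modules.
require('./app.js');

/* app.js */
import {container} from 'needlepoint';

// Use Needlepoint here
```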

There are still a ton of things left to do, but you can always help out by submitting a pull request or even just filing an issue.

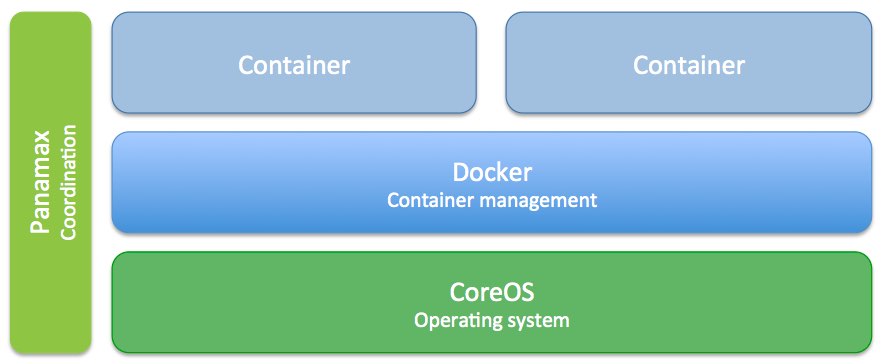

Docker is fantastic for building a scalable infrastructure. Not only does it force you to isolate your application into reasonable chunks, but it also encourages you to build these pieces as stateless services. This is fantastic for high availability and scalability, but actually scaling out a pure Docker-based infrastructure is difficult if done manually.

Docker Swarm and Compose are the "official" solutions to this problem: Swarm lets you build a giant, elastic Docker cluster that appears as a single machine to your client, and Compose lets you easily scale your application to multiple instances.

Despite this, these two components lack a couple of critical features: cross-machine service discovery, and a built-in load balancer that distributes traffic across your scaled Docker infrastructure.

Tutum is a hosted service that adds these missing components, and does so very successfully. Though you can bring your own nodes to Tutum, sometimes it's desirable to run your own, self-hosted service instead.

Rancher is an open source Docker PaaS that includes features like service discovery and DNS, load balancing, multi-node support, cross-host networking, health checks, multi-tenancy, and more. Essentially, Rancher takes all the features of Tutum and packs them into a single Docker container that is hosted on your own nodes, so that you have complete control.

Even better, Rancher is extremely easy to install, taking only a matter of minutes.

To find out how, check out my new mini-course, which I will be expanding over the next several weeks to cover new features in Rancher as well as advanced topics such as service discovery. It's completely free, and I hope you find it useful!

I just launched a brand new, responsive, and completely free WordPress theme. Inspired by my home, the Pacific Northwest (of the United States), Northwestern is a minimalistic WordPress theme for independent bloggers.

The theme's look can also be customized to fit your personality: both the primary colors and the hero image can be changed. Northwestern also supports several of the WordPress post formats, including "aside", links, and quotes.

Go ahead and grab it for free from my website, and feel free to use it for both personal and commercial websites. More information on the license can be found on the store page.

I've been experimenting with Docker for a while, but in the last year or so there has been an influx of tools that make it easy to put Docker containers into production. Some of these come from third-party companies, such as CoreOS, and some from Docker itself (such as the new Docker Swarm, Docker Machine, and Docker Compose). Recently, I've been testing a new Docker workflow that lets me push code and have it tested, built, and deployed onto a production server cluster running Docker.

My welcome bar service, ThreeBar¹, is currently deployed using a continuous integration/continuous deployment (CI/CD) system onto Heroku. In general, new code is deployed with the following workflow:

- Create new feature locally

- Push code to a git repository on a feature/development branch

- Shippable, a CI service, detects this new commit, pulls the code, and runs tests

- If the tests pass and the code is ready to be deployed to production, a merge request is created and merged

- Shippable detects the new commit on the master branch, runs tests, and pushes the code to the Heroku git repository

- Heroku builds the application slug and deploys the application

This is really convenient for shipping features quickly and ensures that all code on the production servers is tested and working.

However, this becomes an issue at scale: Heroku is expensive. Though you receive a month's worth of Heroku Dyno hours² for free each month, scaling beyond a single Dyno costs a minimum of $35/month for half a gigabyte of RAM. To be fair, Heroku adds quite a bit of value beyond simply providing a virtual machine, but some applications might require more flexibility than Heroku's buildpacks provide, or simply require more horsepower.

Docker, of course, can replace Heroku for the actual deployment of code, but the problem of scaling still remains. Manually starting and restarting the containers when you deploy new code is certainly not ideal, so let's take a look at different services we can use in our Docker CI/CD workflow.

Finding the Pieces

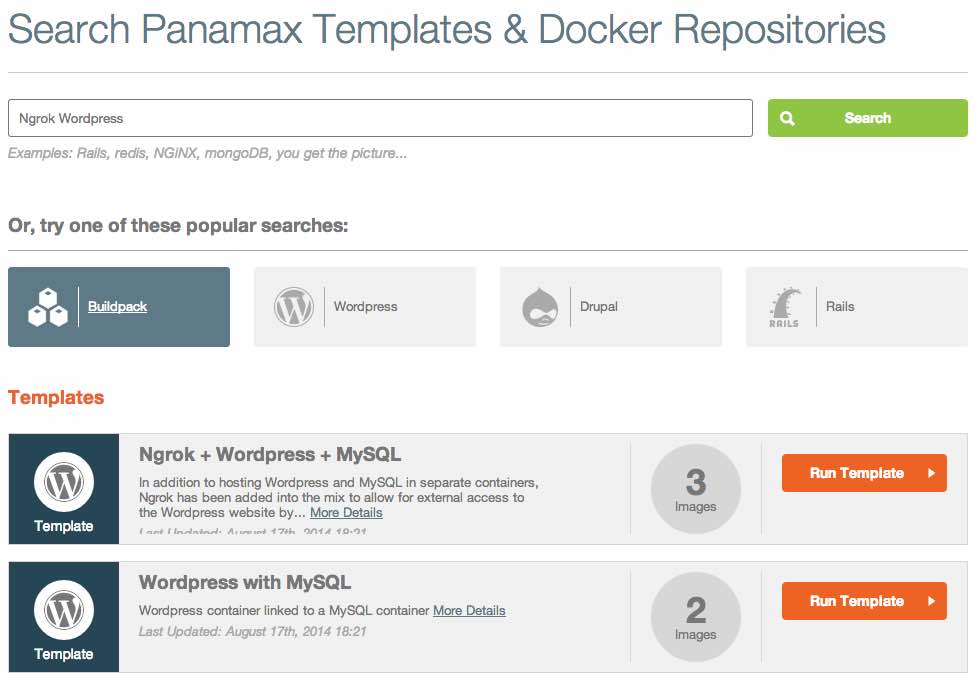

Docker doesn't have an end-to-end solution for this workflow, so we need to piece together several different technologies to get the desired result. The three primary services are a CI/CD test runner, a server provider, and a container orchestrator.

CI/CD Server, Test Runner

When choosing a CI/CD service, make sure it supports building Docker containers. Some services that support this include:

There are others as well (including a plugin for the well-known Jenkins CI server), though you will have to do your own research. Many services run builds in a container, but that is completely separate from whether they can build a container. As you will see later, you can also run Docker-in-Docker, so you may be able to use other services that run builds inside a Docker container to build your Docker container.

For my experiments, I chose the open source GitLab CI system, used in tandem with the GitLab source control service. This way, I could see the build status of each commit in the GitLab interface. GitLab is also a good choice because not only do they offer free source code repository hosting and a hosted version of their CI software, but the software is open source, so you can run it on your own servers as well.

If you do opt to use the free GitLab CI system, you will have to provide your own test runner. The hosted CI software they run only coordinates test runners-- it doesn't actually execute your test jobs. You can either launch the runners on a server provider, or you can actually run them locally on your machine (either bare metal, in a VM, or in a Docker container).

Server Hosting Provider

Of course, you also need a server hosting provider for the Docker daemon to reside on. Unfortunately, using Docker often entails running and managing your own servers. This means that you are responsible for uptime and maintenance. But, as I will show, you can run a multi-availability zone or multi-datacenter infrastructure with Docker, meaning that downtime for a single machine may not be as critical as you might think.

Common hosting provider choices include³:

- Amazon Web Services EC2

- Digital Ocean (get a free $10 credit with this link, enough for 2 months of server time)

- Vultr

- RunAbove

Orchestration

Even if you have a set of Docker containers built and a server running the Docker daemon, you still need a way to easily launch the containers and redeploy them when a new container image is built. The orchestration service I've recently been using is called Tutum.

Tutum is a service that helps you manage your container deployment workflow. At the moment, you can add nodes dynamically from any cloud hosting provider, create new services, and deploy them from a private registry.

Additionally, Tutum creates a private network overlay for your containers, meaning that your Docker services have their own private IP addresses that magically route traffic between every other container in your Tutum account, whether the physical machines are in the same datacenter or across the world on a different provider. This allows you to create a multi-server, multi-cloud solution that is extremely resilient to failure. If you've ever seen Flannel by CoreOS before, the Tutum private network is similar.

I've been looking for this type of service for a while now. A while ago, I experimented with the tinc VPN to create a P2P mesh network between multiple Docker containers. This was long before Docker offered the level of network configuration that they do now, so it's refreshing to see Tutum do the overlay networking so that it just works.

Tutum also aims to eventually integrate native CI/CD features, as well as git-push support. When Tutum is finished with these features, it could potentially become the only other service you need besides a source code repository.

Tutum has a few key features we need for the CI/CD Docker workflow:

- A private Docker registry for container images

- Redeploy containers when a new image is pushed to the registry

- Easy container scaling (in the UI, you can scale a service from N to M containers with a single slider)

- Add nodes as you need, then scale the containers in the Tutum UI

Other nice-to-have features include:

- DNS-based automatic service discovery: after scaling a web app container, for example, your haproxy router will automatically know about the new containers and route to them

- Private network overlay

- Bring-your-own-node-- you're not locked into a single, or specific, cloud vendor
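DNS-based discovery works because, assuming each container in a scaled service is published under the service's name, resolving that name returns one address per container. As a rough sketch of the consumer side (not how Tutum or HAProxy implement it), the hypothetical `createRoundRobin` helper below re-resolves a service name with Node's `dns.promises.resolve4` before each pick and rotates through the results, so newly scaled containers start receiving traffic without any reconfiguration. The resolver is injectable so the example can run against a stub.

```javascript
const dns = require("dns");

// Hypothetical round-robin picker over every address a service name resolves
// to. Re-resolving before every pick means containers added or removed by
// scaling are picked up automatically.
function createRoundRobin(serviceName, resolve = (name) => dns.promises.resolve4(name)) {
  let counter = 0;
  return async function nextBackend() {
    // Sort so the rotation does not depend on the order the records arrive in.
    const addresses = [...(await resolve(serviceName))].sort();
    if (addresses.length === 0) {
      throw new Error(`No backends found for ${serviceName}`);
    }
    const address = addresses[counter % addresses.length];
    counter += 1;
    return address;
  };
}

// Example: a stubbed resolver stands in for DNS while the "web" service is
// scaled from two containers to three (the addresses are made up).
let webRecords = ["10.7.0.3", "10.7.0.2"];
const nextWeb = createRoundRobin("web", async () => webRecords);

(async () => {
  console.log(await nextWeb()); // 10.7.0.2
  console.log(await nextWeb()); // 10.7.0.3
  webRecords = [...webRecords, "10.7.0.4"];
  console.log(await nextWeb()); // 10.7.0.4
})();
```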

You may be able to find another service that offers similar features (CoreOS + Fleet or Docker Swarm are potential candidates), but Tutum is a complete solution that's ready today.

Assembling Everything Together

I've chosen the following stack for my own personal experiments:

- Hosted GitLab for source code repository hosting

- Hosted GitLab CI for CI/CD

- RunAbove as a server provider for both CI/CD runners as well as container hosting⁴

- Tutum for orchestration and service management

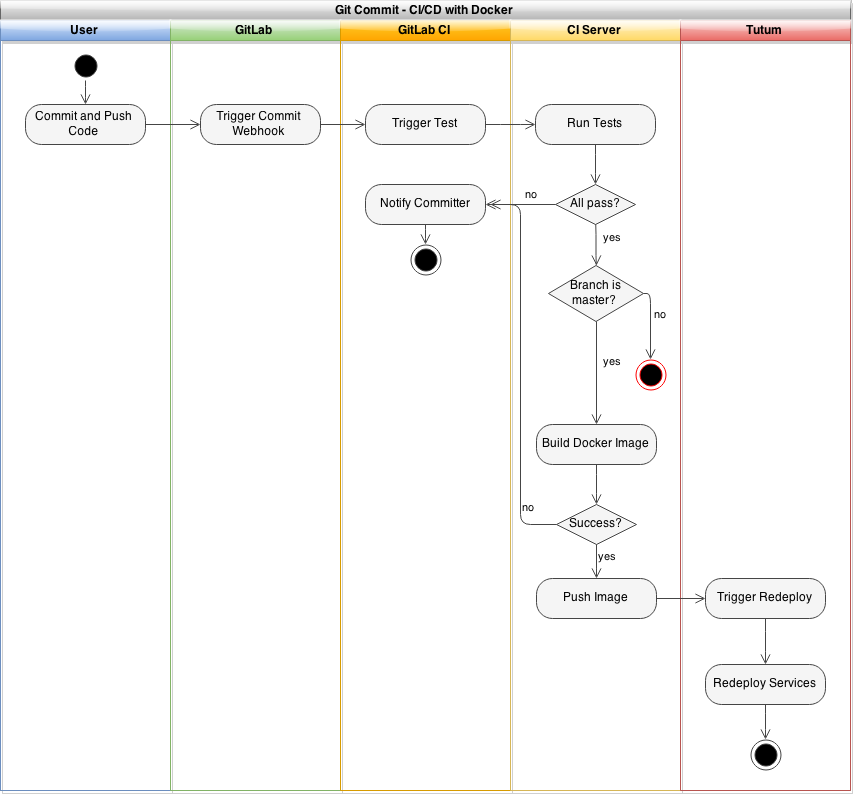

When everything is finished and assembled, the path of a git commit should look like the following:

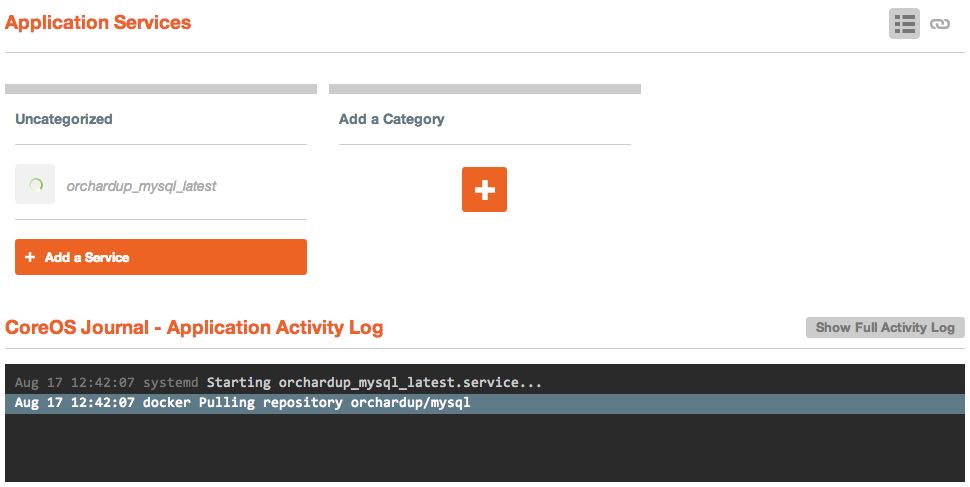

Deploying the Tutum Agent

Because you can use Tutum to deploy the GitLab CI runners themselves, it's a good idea to set up the Tutum agents first. Launch all of the servers you wish to use, then navigate to the Tutum dashboard, where you'll find a "Bring your own node" button. Click it, and you'll receive a command similar to the following:

```shell
curl -Ls https://get.tutum.co/ | sudo -H sh -s XXXXXXXXXXXXXXXXXXX
```

Simply run this command on a node to add it to your Tutum account, and repeat the process for each additional node you wish to add (clicking the "Bring your own node" button each time to get a new token).

Once the agent has been installed on all of your nodes, you should see them in the dashboard. At this point, you might want to tag your nodes appropriately. This allows you to specify which nodes your services will run on. For example, you might have a set of nodes tagged as staging or production to specify an environment, or a node tagged as ci which only hosts your CI runners.

You can tag a node by clicking its name and adding the tags in the left-hand sidebar.

Deploying a GitLab CI Runner

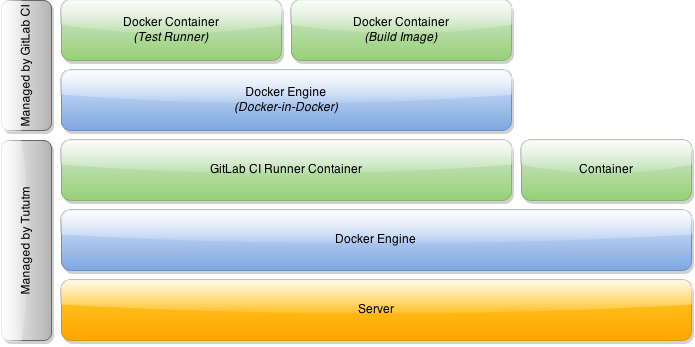

Now, you can deploy a GitLab CI runner with Tutum. However, we're going to need a special type of CI runner-- we need to be able to run Docker within this container so that we can build our own Docker images.

You may be thinking, how is this possible? Since we will be running the GitLab CI runner with Tutum, it will be within a Docker container itself. So, how do you run Docker inside of a Docker container?

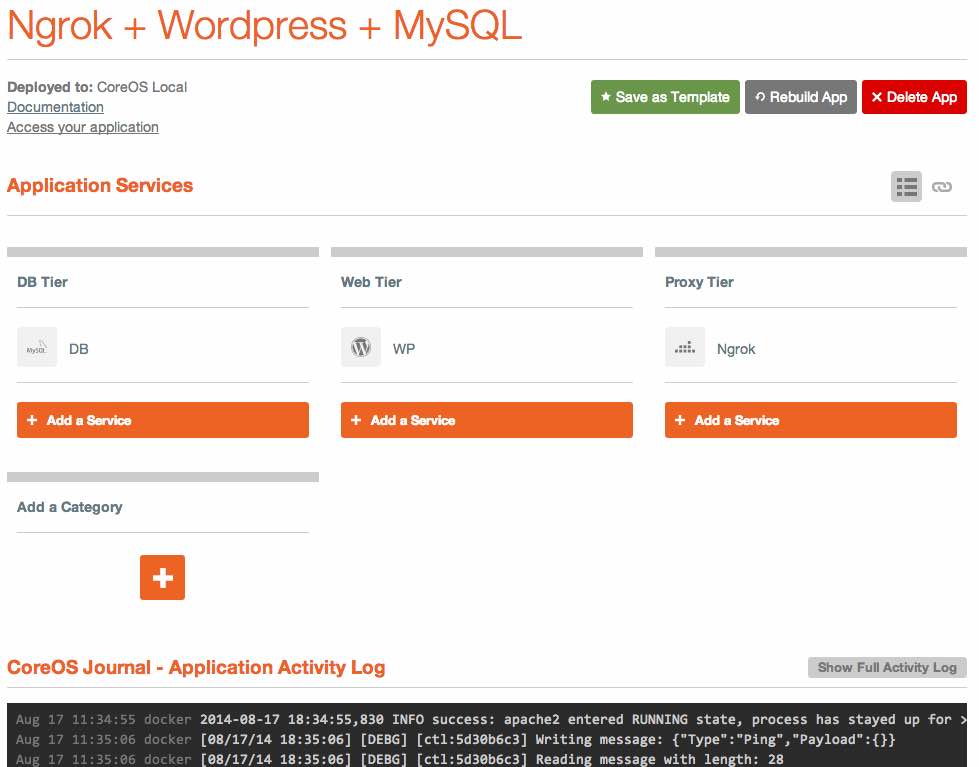

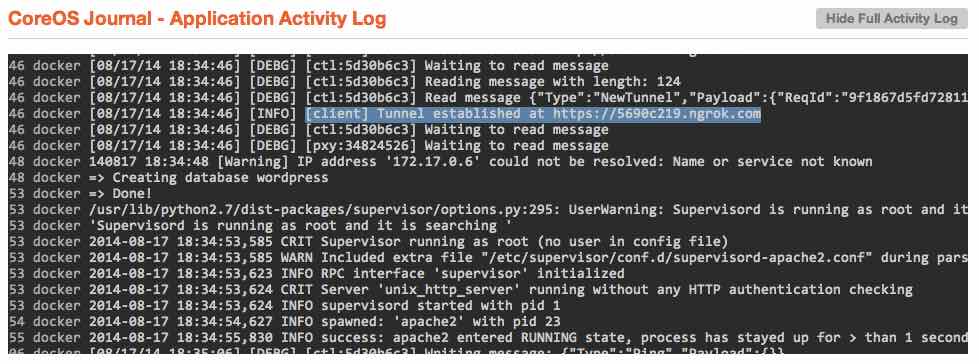

It turns out this is entirely possible to do. In fact, you can even run Docker-in-Docker-in-Docker or nest Docker as many levels deep as you wish. Our final architecture for the GitLab CI runner will look something like this:

As you can see, Tutum launches the GitLab CI runner within a Docker container on our node. Additionally, the GitLab CI runner will actually use Docker to build images and run tests, meaning we have two nested levels.

I've built DinD functionality into a forked version of the GitLab CI Runner, available on GitHub and the official Docker Registry.

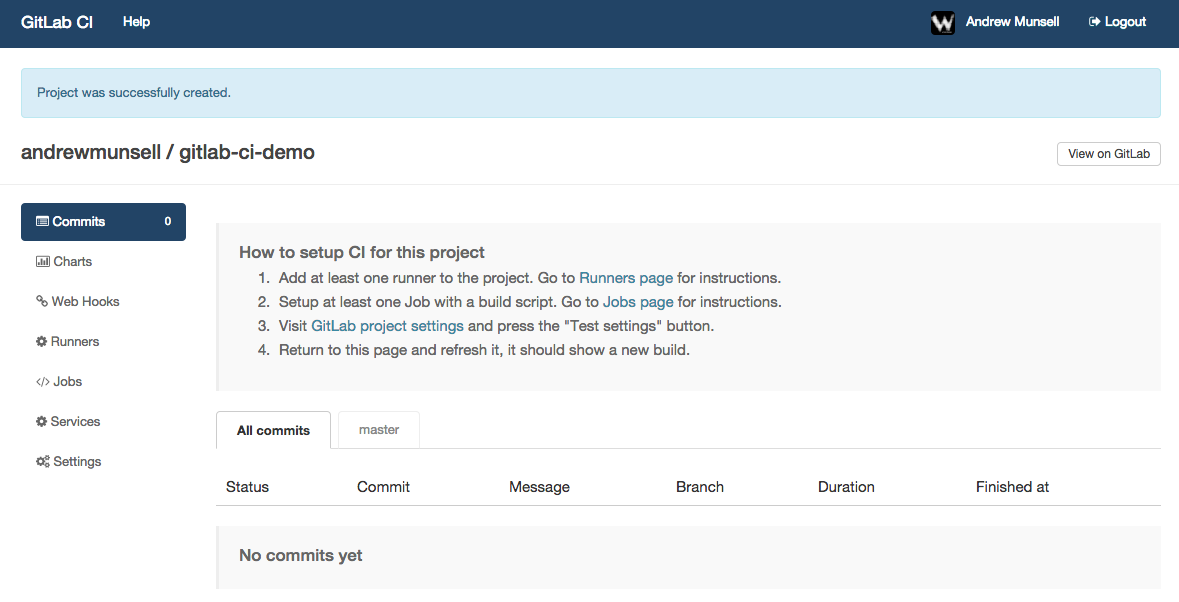

Before you set up your GitLab CI runner, make sure that you already have a repository in a GitLab instance as well as a running GitLab CI coordinator. As mentioned previously, you can either host your own instances or use GitLab's free hosted repository and CI service.

Once you have a repository in GitLab, you can link your GitLab CI account with a couple clicks. After you've linked your accounts together, you'll see a list of your repositories in the GitLab CI dashboard with "Add project to CI" buttons next to them. Simply click the button next to your project to add it to GitLab CI, and you'll see your new project CI dashboard.

If you poke around the GitLab CI interface, you'll notice a menu item titled "Runners". This page has a registration token, as well as instructions for starting a new runner. In our case, we'll use Tutum to deploy our runner with the DinD GitLab CI Runner image. Make sure you copy both the registration token and the GitLab CI URL; you'll need both in a couple of minutes.

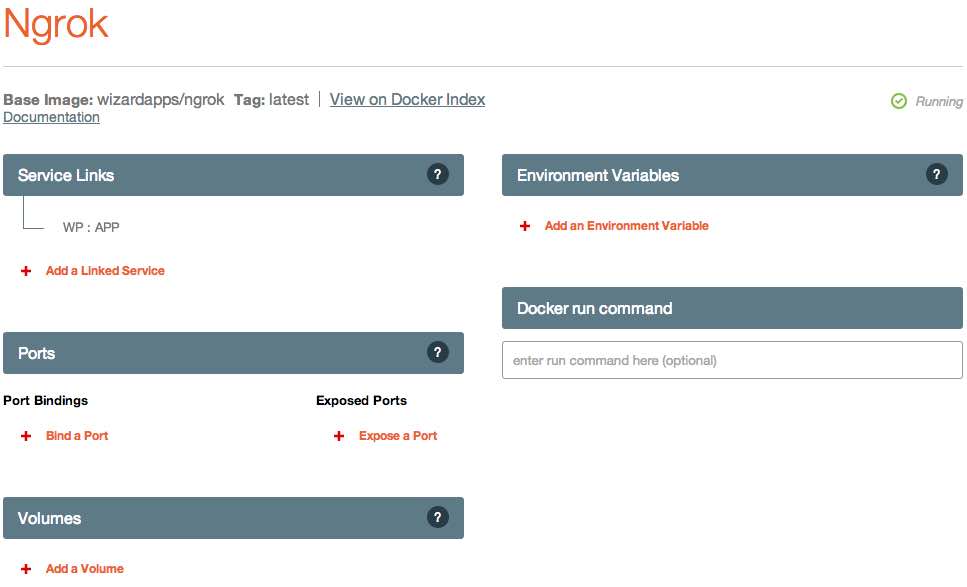

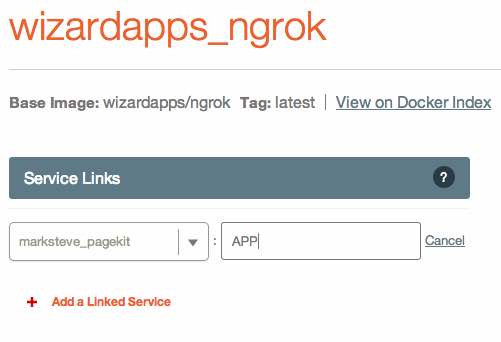

In your Tutum interface, create a new service. A service in Tutum is a logical group of Docker containers all running the same software and using the same configuration. Each service can have zero or more containers running at one time, and Tutum will orchestrate scaling and scheduling your containers across all of your nodes.

On the first screen of the launch wizard, you'll see a few tabs that let you select where your Docker image comes from. Tutum has a built-in, complimentary private registry, as well as support for "featured" images and searching other public registries, including the Docker Hub. Switch to the "Public Images" tab and search for the "wizardapps/dind-gitlab-ci-runner" image, which is the DinD modification of the official GitLab CI Runner repository that I mentioned earlier.

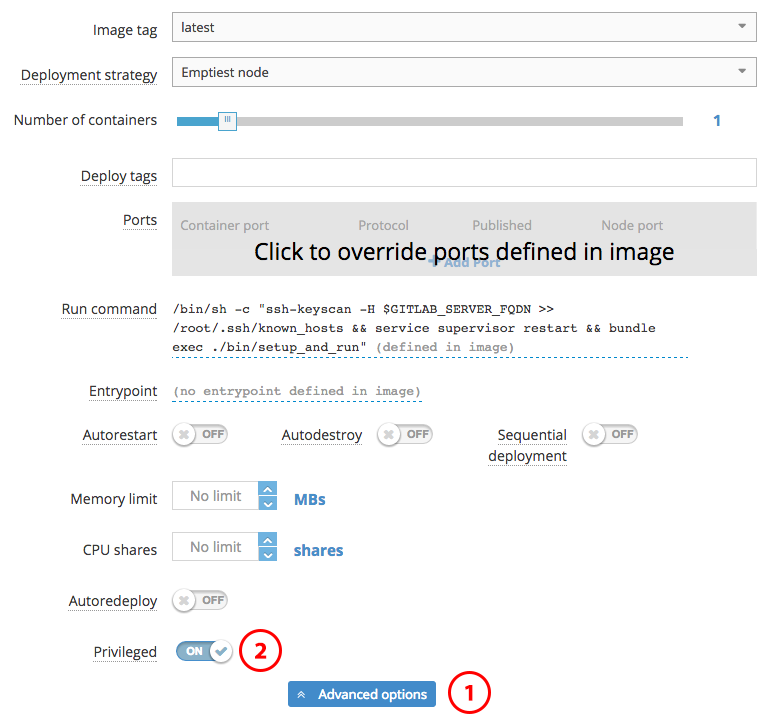

Once you've selected the correct image, you'll be faced with a couple of new options for scheduling your service containers and basic configuration. For the deployment strategies, it's best to read Tutum's documentation on their behavior, though the default "Emptiest Node" will likely work for the CI runner. You'll also likely want to leave the number of containers at 1 unless you wish to do parallel builds. If you previously set tags on your Tutum nodes, make sure you enter the correct tags into the "Deploy Tags" field. Tutum will try to find a node that satisfies all of the requested "Deploy Tags"; they are ANDed together.
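The tag matching and the "Emptiest Node" strategy can be modeled in a few lines. This sketch assumes that "emptiest" means the node running the fewest containers and that a node is eligible only if it carries every deploy tag; `pickNode` and the node objects are hypothetical, not Tutum's API.

```javascript
// Hypothetical model of tag-constrained scheduling: a node is eligible only if
// it carries every requested deploy tag (the tags are ANDed together), and the
// emptiest-node strategy then picks the eligible node with the fewest containers.
function pickNode(nodes, deployTags) {
  const eligible = nodes.filter((node) =>
    deployTags.every((tag) => node.tags.includes(tag))
  );
  if (eligible.length === 0) {
    return null; // no node satisfies all of the deploy tags
  }
  return eligible.reduce((best, node) =>
    node.containerCount < best.containerCount ? node : best
  );
}

const nodes = [
  { name: "node-1", tags: ["production"], containerCount: 4 },
  { name: "node-2", tags: ["ci"], containerCount: 1 },
  { name: "node-3", tags: ["ci", "production"], containerCount: 2 },
  { name: "node-4", tags: ["ci"], containerCount: 3 },
];

console.log(pickNode(nodes, ["ci"]).name);               // node-2
console.log(pickNode(nodes, ["ci", "production"]).name); // node-3
console.log(pickNode(nodes, ["staging"]));               // null
```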

There is one important setting you must change on this first configuration screen, and it's buried in the "Advanced Options" section-- "Privileged Mode". Docker-in-Docker requires this setting to be enabled, and Tutum fortunately makes it easy to do so.

After enabling privileged mode, you can continue to the next screen: environment variable configuration.

Like with the Docker CLI, Tutum allows you to specify environment variables to be inserted into your Docker containers. However, with Tutum, every container in a service will have the same environment variables. Though we won't use it for the GitLab CI runner, Tutum's dynamic links feature also gives containers access to other linked containers' environment variables.

There are three important variables that need to be configured:

- REGISTRATION_TOKEN: The registration token that you copied earlier from the GitLab CI "Runners" page

- CI_SERVER_URL: The CI server URL that was provided on the GitLab CI "Runners" page. If you're using the hosted version of GitLab CI, this is "https://ci.gitlab.com/"

- GITLAB_SERVER_FQDN: The fully qualified domain name for your GitLab CI instance. This is used to perform an ssh-keyscan. If you are using the hosted version of GitLab CI, this is "ci.gitlab.com"

After you've configured these variables, it's time to "Create and Deploy" your service.

Once the container finishes launching, you can go back to GitLab CI's "Runners" page, where you should see a new entry. You're now ready to set up the GitLab CI job.

Creating a DinD GitLab CI Job

The final step in setting up our CI/CD workflow is to add the actual job script to GitLab CI. This will vary depending on your project, but one aspect will remain consistent: you'll use Docker to build a Docker image, which is then uploaded to your image repository (in this case, Tutum's private Docker registry).

GitLab CI jobs can be modified in the "Jobs" tab. Within this section, there are two different subsections-- "Test", and "Deploy". As their names might suggest, the test scripts are generally used to run unit or integration tests. The deploy scripts are only run for specific branches once the tests have completed successfully. This allows you to have a test script that runs for every commit, and a deploy script that runs only for the master branch and once all of the tests have completed successfully.
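The relationship between the two kinds of scripts can be modeled as a small function. This is a hypothetical sketch of the behavior described above, not GitLab CI's code; `runJobs`, the `test`/`deploy` callbacks, and the assumption that only `master` deploys are illustrative.

```javascript
// Hypothetical model of the test/deploy split: the test script runs for every
// commit, while the deploy script runs only when the commit is on one of the
// deploy branches (assumed to be just "master" here) and the tests passed.
function runJobs(branch, { test, deploy }, deployBranches = ["master"]) {
  const log = [];
  const passed = test();
  log.push(passed ? "test: passed" : "test: failed");
  if (passed && deployBranches.includes(branch)) {
    deploy();
    log.push("deploy: ran");
  } else {
    log.push("deploy: skipped");
  }
  return log;
}

const scripts = { test: () => true, deploy: () => {} };
console.log(runJobs("feature/login", scripts).join(", ")); // test: passed, deploy: skipped
console.log(runJobs("master", scripts).join(", "));        // test: passed, deploy: ran
```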

An example test script might look like the following:

```shell
docker login -u [USERNAME] -e [EMAIL] -p="[PASSWORD]" tutum.co

# Build the Docker image and tag it for Tutum
docker build -t wizardapps/app .
docker tag -f wizardapps/app tutum.co/wizardapps/app:latest
```

The above test script doesn't actually run any tests, but it does build a Docker image for our application and tag it properly. If you use this as a starting point for your own test scripts, make sure you change the username, email, and password placeholders for the Tutum registry. Because Tutum provides a private Docker registry, you need to ensure your test runner is authenticated with your credentials.⁵

Then, we could also have a deploy script that actually pushes the image to Tutum's registry, therefore kicking off the deployment:

```shell
docker push tutum.co/wizardapps/app
```

Auto-Redeploy

At this point, your system should be up and running for builds, and new code should be built as a container and uploaded to the Tutum registry. All that's left is to create your service on Tutum, and ensure auto-redeploy is enabled.

This is done similarly to how we set up our CI service, though instead of making the service "privileged", we want to enable the "Auto Redeploy" switch. Configure all of your service's ports, environment variables, links, and volumes, and then just hit deploy.
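Conceptually, auto-redeploy is a rule that maps a registry push to the services that should restart. The sketch below models that rule under two assumptions: a service matches when it runs the same image and tag, and an untagged reference means `:latest` (as it does in Docker). `normalizeImage`, `servicesToRedeploy`, and the service objects are hypothetical, not Tutum's API.

```javascript
// Hypothetical model of auto-redeploy: when an image is pushed to the registry,
// every service running that image and tag with "Auto Redeploy" switched on is
// redeployed. The service objects and field names are made up.
function normalizeImage(image) {
  // An image reference without a tag means ":latest". Only look for ":" after
  // the last "/" so a registry port such as "localhost:5000/app" is not
  // mistaken for a tag.
  const name = image.slice(image.lastIndexOf("/") + 1);
  return name.includes(":") ? image : `${image}:latest`;
}

function servicesToRedeploy(services, pushedImage) {
  const pushed = normalizeImage(pushedImage);
  return services
    .filter((service) => service.autoRedeploy && normalizeImage(service.image) === pushed)
    .map((service) => service.name);
}

const services = [
  { name: "web", image: "tutum.co/wizardapps/app:latest", autoRedeploy: true },
  { name: "worker", image: "tutum.co/wizardapps/app", autoRedeploy: true },
  { name: "web-staging", image: "tutum.co/wizardapps/app:staging", autoRedeploy: true },
  { name: "ci-runner", image: "wizardapps/dind-gitlab-ci-runner", autoRedeploy: false },
];

// Pushing tutum.co/wizardapps/app without a tag implies the latest tag.
console.log(servicesToRedeploy(services, "tutum.co/wizardapps/app").join(", ")); // web, worker
```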

Congratulations, you now have your own Docker application that is automatically redeployed onto a fleet of servers, but only after the code has been tested!

Other Resources

GitLab CI Multi-Runner: https://github.com/ayufan/gitlab-ci-multi-runner

The setup we have requires one CI "service" on Tutum per project-- this can quickly get out of hand. As an alternative, you can experiment with the GitLab CI Multi-Runner, which takes a configuration file and allows for multiple projects to be built.

- I've got some really cool new stuff coming in ThreeBar-- far beyond just a welcome bar. Feel free to sign up to be notified when it's ready! Once it's live, you can see the power of a CI-deployed-Docker-application yourself.

- Heroku runs on a pricing model where you pay by the hour for resources you use. Each "virtual machine" running your code is called a "Dyno", and can run a single command such as a web server or queue worker. You get ~750 hours of "Dyno" usage each month for free, which means you can run one web server per application on Heroku for free if you wish.

- I have personally used all the following server providers, and I do earn affiliate revenue for some of the links in this blog post. However, any server provider that allows you to run Linux instances should work for setting up your Docker server.

- RunAbove's Sandbox platform is great for these experiments because the servers have plenty of RAM and SSD storage and are extremely cheap: about $3 USD per month for a server with 2 GB of RAM. However, they are not covered by an SLA at the moment, so for production traffic you may wish to choose another provider.

- At the moment, you must put your Tutum username and password directly into the job script. Unfortunately, Tutum does not provide separate passwords or API keys for the registry, so this solution leaves a potential security hole.

Today, LoopPay announced that they were acquired by Samsung. This wasn't entirely unforeseen, considering rumors have been popping up regarding LoopPay's future integration with Samsung phones. The LoopPay system is a new technology that is tied to the current magnetic stripe credit card system. Using a small loop of wires in a special hardware device, the LoopPay devices essentially mimic the magnetic field caused by a swipe of a credit card, enabling mobile payments at nearly every existing credit card swipe terminal.

The device works like magic-- simply place the Fob or Card next to the magnetic swipe slot on an existing credit card machine, press a button on your LoopPay, and the reader will act as if a physical card was swiped. I've owned one of these devices for a little over a month (specifically, the LoopPay Card) and it has worked flawlessly every time I've used it on a traditional terminal, but there's one big problem that needs to be solved before LoopPay can become a major player in the mobile payments space.

Traditionally, mobile payments require a special NFC terminal and a phone that supports Google Wallet, Apple Pay, or some other alternative platform. With systems such as Apple Pay, which is built into every iPhone 6/6+, NFC payments are only accepted at around 200,000 kiosks. LoopPay doesn't have this issue: it currently works at an estimated 10 million stores because it uses the existing magnetic stripe infrastructure.

Of course, with the iPhone as popular as it is, a significant number of people now know about mobile payments, and you'll find people paying with their phone more often now than they were a year ago. However, due to Apple Pay's dominance in the market, this also leads to some psychological problems when trying to use a new technology such as LoopPay:

Several times I have been told "we don't support that", when offering up my LoopPay Card. The cashier is confused, and despite trying to explain that it will work with their card reader and offering to show them how, I've never gotten them to budge. They have this preconceived notion that their traditional card terminal will only work with actual plastic credit cards, and the public conflicts between retailers and Apple only solidify the idea that a special terminal is needed for anything else. Needless to say, I've never tried handing a server my LoopPay-- without me physically there to guide them, they certainly would be even more perplexed.

It could just be the wording I use, but LoopPay actually has a section in its FAQ dedicated to sweet-talking a cashier into accepting your LoopPay device. The lack of education around this technology is clearly a problem, and there's no easy solution.

LoopPay's acquisition may actually make the situation worse for a while-- if a new crop of phones appears that can make mobile payments at any terminal, cashiers may become even more confused. The average cashier may be able to distinguish an iPhone from other devices and know whether they have a terminal that supports Apple Pay, but I certainly don't see them being able to identify the new Galaxy S6 (or whatever future devices come out with LoopPay integrated) and know what to do with it.

If LoopPay is simply integrated with the latest round of Samsung phones and people just use it to pay at self-service credit card terminals (that is, where you don't have to hand your card over to another person), it could bring mobile payments to even more retailers without any effort on their part. Personally, I've already replaced my physical credit cards with my LoopPay card for daily purchases.

But even if you still have to use a physical card at restaurants or when handing it over to another person, LoopPay's integration into Samsung phones could help smooth the path for mobile payments. Additional features, like one-time card tokens (which LoopPay has previously said are in progress for 2015), may also help LoopPay become not only a replacement for your credit card, but a layer of security as well.

Right now, LoopPay is largely a technology toy and something for me to fiddle with, but maybe their new life with Samsung can help them expand to more consumers and accelerate the death of traditional credit card payments.

NOOBS is a system designed by the Raspberry Pi Foundation for installing operating systems onto your Raspberry Pi's SD card. Not only can you install an operating system with a single click, but you can also install it over a network or even install multiple operating systems on multiple partitions.

For example, if you ever wished you could install both Raspbian and OpenELEC, NOOBS not only makes this possible but also makes it easy to switch back and forth between the two.

Requirements

NOOBS requires a couple of things, most of which you probably already have:

- A Raspberry Pi (any model from the original to the newest Raspberry Pi 2)

- An SD card (or a Micro SD for the newer models) that is 4 GB or larger

- A computer with an SD card slot

One thing you must take note of is the 4 GB SD card requirement. If you have a smaller SD card or you simply need a new one, there are several cheap models that you can purchase:

- Full Sized SD Card (for the original Raspberry Pi)

  - Sony 16GB SDHC/SDXC Class 10 (~$10)

  - SanDisk Ultra 16GB Class 10 SDHC (~$12)

- Micro SD Card (for the Model A+/B+ or newer)

  - SanDisk Ultra 16GB UHS-I/Class 10 Micro SDHC (~$8)

  - Samsung 16GB EVO Class 10 Micro SDHC with Adapter (~$11)

All of the above micro SD cards come with adapters, so if you buy a micro SD card you can use it in your original Raspberry Pi now and move it to a model A+/B+ or newer later.

Preinstalled NOOBS SD Cards

You can also purchase an SD/micro SD card with NOOBS preinstalled. This is more expensive than just loading NOOBS onto an SD card yourself, but is an option for convenience:

Installing NOOBS

First, you must download the NOOBS image from the Raspberry Pi website. There are a couple of different options for download:

- NOOBS (offline installer)

  - Torrent

  - Compressed ZIP

- NOOBS LITE (online installer)

  - Torrent

  - Compressed ZIP

The offline installer is significantly larger than the "LITE" version, but it can reinstall the Raspbian operating system without a network connection. This is because the actual OS image is saved onto the SD card when you install NOOBS for the first time. However, the bundled OS image may become outdated over time; if you do have a network connection, the newer OS version will be downloaded and installed instead.

NOOBS LITE is significantly smaller in size, but requires a network connection to install the OS images. Both the regular NOOBS and NOOBS LITE installers allow for installing from a network.

Once you've downloaded the version of NOOBS you prefer (either the regular version or LITE), then you can simply extract the ZIP file.
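Any unzip tool works here, but if you'd like to script it, Python's standard `zipfile` module can both check the download for corruption and extract it. This is a minimal sketch; the function name and the paths in the example comment are hypothetical, so substitute the file you actually downloaded.

```python
import zipfile
from pathlib import Path


def extract_noobs(zip_path: str, dest_dir: str) -> list[str]:
    """Extract a downloaded NOOBS ZIP into dest_dir and return the archive's member names."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        # testzip() re-reads every member and returns the name of the first one whose
        # CRC check fails, or None when the whole download is intact.
        bad_member = archive.testzip()
        if bad_member is not None:
            raise ValueError(f"corrupt file in archive: {bad_member}")
        archive.extractall(dest)
        return archive.namelist()


# Example (hypothetical paths):
# extract_noobs("NOOBS.zip", "NOOBS")
```

Checking the archive first is cheap insurance: a truncated download is much easier to spot here than after you've booted the Pi.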

Formatting Your SD Card

Before you can actually place NOOBS onto your SD card, you must format it. Note: formatting the card erases all data on it.[1]

Mac (the easy way)

On the Mac, you can use the built-in "Disk Utility" application. Launch "Disk Utility" and insert your SD card into your Mac. You'll see it in the sidebar of the application, along with some items indented underneath it (what these items are, and how many there are, will vary based on how you previously used your SD card).

Ensure you select the root SD card (that is, the topmost item), and switch to the "Erase" tab in the right-hand pane. Leave the format as "FAT" and choose a name for it (FAT volume names are always shown in uppercase letters). Then, simply hit the "Erase..." button in the bottom right to format the card.
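If you'd rather do this from the Terminal, macOS's `diskutil eraseDisk` command can erase the whole card in one step. The Python sketch below only builds and prints the command unless you explicitly pass `dry_run=False`. It assumes your card shows up as a whole-disk identifier such as `disk2` (run `diskutil list` to find yours, since choosing the wrong disk erases it), and it picks FAT32 with an MBR partition scheme explicitly; the function names are my own.

```python
import re
import subprocess


def build_erase_command(disk_id: str, name: str) -> list[str]:
    """Return the diskutil argv that erases a whole disk as a single FAT32 (MBR) volume."""
    disk_id = disk_id.removeprefix("/dev/")  # accept "disk2" or "/dev/disk2"
    # Whole-disk identifiers look like "disk2"; reject partitions such as "disk2s1",
    # because we want the entire card reformatted, not a single partition.
    if not re.fullmatch(r"disk\d+", disk_id):
        raise ValueError(f"expected a whole-disk identifier like 'disk2', got {disk_id!r}")
    label = name.upper()  # FAT volume labels are uppercase
    if not 1 <= len(label) <= 11:  # FAT volume labels hold at most 11 characters
        raise ValueError("FAT volume names must be 1-11 characters long")
    return ["diskutil", "eraseDisk", "FAT32", label, "MBRFormat", f"/dev/{disk_id}"]


def erase_card(disk_id: str, name: str, dry_run: bool = True) -> list[str]:
    """Print the erase command; only execute it when dry_run is False."""
    cmd = build_erase_command(disk_id, name)
    print(" ".join(cmd))
    if not dry_run:
        # This destroys everything on the target disk.
        subprocess.run(cmd, check=True)
    return cmd
```

For example, `erase_card("disk2", "noobs")` prints `diskutil eraseDisk FAT32 NOOBS MBRFormat /dev/disk2` without touching anything.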

Windows or Mac

Because we want to format the entire card and not just a single partition, you may want to use the SD Card Formatter by the SD Association. Once you've downloaded the formatter from their website, insert your SD card and run the formatter tool.

When you open the formatter tool, you'll have a few options-- the SD device to format, the type of format operation, and the new name of the SD card. Make sure you select the right SD card if you have multiple SD cards plugged into your computer. You can choose either format type, though the quick format is usually sufficient if you are not trying to erase sensitive data. Finally, choose whatever name you wish and hit the "format" button.

Copying NOOBS

Once you have a formatted FAT SD card, you can simply copy the files for NOOBS that you extracted earlier. A simple copy and paste is all that is needed. It might take a while to copy the regular version of NOOBS due to the slow speed of SD cards, but once it's done you can eject the card from your computer and insert it into your Raspberry Pi.
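One detail that trips people up: the *contents* of the extracted folder need to sit at the root of the card, not inside a `NOOBS` subfolder. Here is a minimal Python sketch of the copy step (Python 3.9 or newer for `dirs_exist_ok`); it assumes the card is mounted at a path you pass in, such as `/Volumes/NOOBS` on a Mac, and the function name is hypothetical.

```python
import shutil
from pathlib import Path


def copy_noobs_to_card(extracted_dir: str, card_root: str) -> int:
    """Copy the contents of the extracted NOOBS folder to the card root; return the file count."""
    src = Path(extracted_dir)
    dst = Path(card_root)
    if not src.is_dir():
        raise NotADirectoryError(f"{src} is not a directory")
    # dirs_exist_ok=True lets copytree write into the card's existing root directory,
    # so the NOOBS files land at the top level instead of in a subfolder.
    shutil.copytree(src, dst, dirs_exist_ok=True)
    # Verify every file arrived at the same relative path with the same size.
    copied = 0
    for path in src.rglob("*"):
        if path.is_file():
            target = dst / path.relative_to(src)
            if not target.is_file() or target.stat().st_size != path.stat().st_size:
                raise OSError(f"copy incomplete: {target}")
            copied += 1
    return copied
```

The size check won't catch every problem, but it does catch the common case of ejecting the card before a slow copy has finished.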

Using NOOBS

Installing an OS

Before you plug your Raspberry Pi into the power cable, attach a USB keyboard and mouse so that you can navigate the NOOBS interface. Once you have the keyboard and mouse plugged in, you can turn on the power to your Raspberry Pi and you should be greeted with a screen similar to the following:

This is the operating system installation screen, which allows you to choose one or more OS images to install onto your SD card. Notice the icons on the right-hand side-- those OS images with a picture of an SD card are already included with your NOOBS installation, while those with an Ethernet symbol can be downloaded over a network.

Simply check the boxes next to the OS images you wish to install, and hit the install button in the toolbar at the top of the window.

Boot Selection

Once you've installed one or more operating systems with NOOBS, rebooting your Raspberry Pi will result in one of two things happening automatically:

- If you have a single OS installed, it will boot automatically

- If you have more than one OS installed, a boot selector will be displayed

To get back to the OS installation screen, simply hold the "shift" key on your keyboard as you turn on your Raspberry Pi.
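The rules above boil down to a small decision. The Python function below is a toy model of that behavior, written only to summarize the cases; it is not NOOBS's actual code, and the names and return strings are hypothetical.

```python
from typing import Sequence


def noobs_boot_action(installed_os: Sequence[str], shift_held: bool = False) -> str:
    """Toy model of what NOOBS does at power-on, following the rules described above."""
    if shift_held or not installed_os:
        # Holding Shift (or having nothing installed yet) brings up the installation screen.
        return "installer"
    if len(installed_os) == 1:
        # A single installed OS boots automatically.
        return f"boot {installed_os[0]}"
    # Two or more installed OSes: show the boot selector.
    return "boot selector"
```

For example, `noobs_boot_action(["Raspbian", "OpenELEC"])` returns `"boot selector"`, while passing `shift_held=True` always returns `"installer"`.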

Other Resources

If you just want to install a single operating system, you might find my tutorial on installing the Raspbian OS directly onto your SD card useful. With this method, the Raspberry Pi will automatically boot into Raspbian, though you do not get any sort of interface for reinstalling the operating system.

Advanced information on the NOOBS system can be found in the GitHub repository. This includes info on silent installation, as well as configuring advanced parameters such as choosing your auto-boot partition or forcing a display mode.
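As an illustration of those advanced options, here is a Python sketch that edits the files on the card. It assumes the conventions I understand the NOOBS README to describe: appending `silentinstall` to the single line in `recovery.cmdline`, and an `autoboot.txt` file containing `boot_partition=<number>`. Double-check both against the current README before relying on them; the function names are hypothetical.

```python
from pathlib import Path


def enable_silent_install(card_root: str) -> str:
    """Append the `silentinstall` option to recovery.cmdline, keeping it on one line."""
    cmdline = Path(card_root) / "recovery.cmdline"
    args = cmdline.read_text().split()
    if "silentinstall" not in args:  # don't add the option twice
        args.append("silentinstall")
    cmdline.write_text(" ".join(args))
    return cmdline.read_text()


def set_autoboot_partition(card_root: str, partition: int) -> str:
    """Write autoboot.txt so NOOBS skips the boot selector and boots `partition`."""
    if partition < 1:
        raise ValueError("partition numbers start at 1")
    autoboot = Path(card_root) / "autoboot.txt"
    autoboot.write_text(f"boot_partition={partition}\n")
    return autoboot.read_text()
```

Both functions are safe to run more than once: the first won't duplicate the option, and the second simply overwrites the file.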

[1] Technically, if the data is not overwritten (e.g., with a quick format), it can be recovered, but I wouldn't count on it...

A couple of days ago, the Raspberry Pi Foundation released the Raspberry Pi 2. The Raspberry Pi 2 comes with some fantastic new features that I'm looking forward to utilizing, including an upgraded processor and RAM. Even better, the hardware comes in at the same $35 as the original model B.

Where Can You Buy a Raspberry Pi 2?

Unfortunately, the Raspberry Pi 2 sold out pretty quickly from a lot of distributors. However, you can still backorder a Raspberry Pi 2 and you'll receive it as soon as it comes in to your distributor.

- United States

  - Newark/Element 14

  - Allied Electronics

- Canada

  - Newark/Element 14

- UK

  - RS

The Things That Haven't Changed

The Raspberry Pi 2 is physically similar to the current model B+, meaning that your existing cases and accessories will likely work on the new device with little or no modification; cases in particular will fit your new Raspberry Pi 2 as-is. The GPIO pins are also in the same place, so you can continue to use any of your existing projects that connect to them.

Additionally, the micro SD card you use with your current model B+ will physically fit just fine in your new Raspberry Pi 2 (though you will have to reformat it and reinstall the OS, as noted below). However, remember that if you have an original model A/B, you will need to purchase a micro SD card, as the original models used a full-sized SD card.

The Things That Are Different

One thing to note if you are upgrading from an original Raspberry Pi (model A, A+, B, or B+): the updated processor means that you will not be able to just move your SD card from your old Raspberry Pi to the new one. However, many of the popular operating systems have been updated for the Raspberry Pi 2, including:

- Raspbian

- OpenELEC

- OSMC

- Snappy Ubuntu Core

- Debian Jessie

As usual, these operating systems can be installed manually with their respective OS images (available on the Raspberry Pi website) and using the image flashing method, or with NOOBS.
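If you end up with several SD cards and aren't sure which board a running system is on, one quick check is the machine string the Linux kernel reports (the same value `uname -m` prints): the original boards' ARMv6 processor shows up as `armv6l`, and the Raspberry Pi 2's ARMv7 processor as `armv7l`. The Python helper below is a hypothetical convenience built on the standard `platform.machine()` call; note that later ARMv7-class boards would also report `armv7l`.

```python
import platform
from typing import Optional

# Machine strings the Linux kernel reports on each Raspberry Pi CPU family.
_PI_ARCHES = {
    "armv6l": "ARMv6: original Raspberry Pi (model A, A+, B, B+)",
    "armv7l": "ARMv7: Raspberry Pi 2 (or a newer ARMv7-class board)",
}


def describe_pi_cpu(machine: Optional[str] = None) -> str:
    """Describe the CPU family for a machine string (defaults to the current system's)."""
    machine = machine if machine is not None else platform.machine()
    return _PI_ARCHES.get(machine, f"not a recognized Raspberry Pi CPU: {machine!r}")
```

Running `describe_pi_cpu()` on the Pi itself describes the board it's running on; passing a string lets you test the mapping anywhere.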

In the future, you'll also be able to run a version of Windows 10 through the Windows IoT program.

Once my Raspberry Pi 2 arrives, I'll have more information on cool things you can do with the increased power. If you'd like to know when I write a new article, feel free to subscribe to my mailing list.

What better way to start the new year than with a couple of quick lessons on new JavaScript features? I'm in the process of writing a series of short lessons on the new ES2015/ES6/ES7 JavaScript features, such as the immensely helpful classes and arrow functions.

For this course, I've also written a series of quizzes to help keep you on your toes: simply register for an account, navigate to the course page, and click the button titled "Start Taking this Course". You'll be able to take the quizzes, see your results, and keep tabs on your reading progress. All of this is completely free, and you are not added to mailing lists of any kind (the email address is required only so that you can reset your password if needed)!

If you wish to receive notifications when I post new JavaScript lessons, you can always sign up for my JavaScript mailing list below. You'll never get any spam, and you'll receive notifications when I post new lessons or have a new JavaScript-related tutorial or tool to show you.

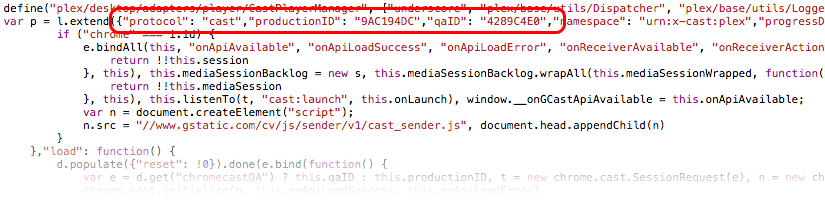

Update: As of October 15th, 2015 (about 10 months after I originally wrote these instructions), Plex has finally removed the hard-coded maximum bitrate. This guide will remain for historical reasons, but you should no longer need to follow these steps to stream high-bitrate video to your Chromecast.

I am an enthusiastic user of Plex, but I recently discovered that they had made the poor choice of hard-coding a bitrate limitation into their Chromecast application. Essentially, this enforced a 12,000 kbps (~12 Mbps) limit on media, meaning that anything with a higher bitrate would be transcoded. This isn't a problem when you have a decent server running Plex, but I am running it on an old laptop that can barely keep up with 4 Mbps transcodes.
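To get a rough idea of whether a given file would hit that cap, you can estimate its average bitrate from its size and running time: bits divided by seconds. This Python sketch does that arithmetic; it is only an approximation, since Plex may have compared the bitrate stored in the file's metadata (or a peak value) rather than an average, and the function names are hypothetical.

```python
def average_bitrate_kbps(size_bytes: int, duration_seconds: float) -> float:
    """Average bitrate in kilobits per second, using 1 kbps = 1,000 bits per second."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    return size_bytes * 8 / duration_seconds / 1000


def exceeds_cap(size_bytes: int, duration_seconds: float, cap_kbps: float = 12_000) -> bool:
    """True if the file's *average* bitrate is above the cap (12,000 kbps by default)."""
    return average_bitrate_kbps(size_bytes, duration_seconds) > cap_kbps


# Example: a hypothetical 8 GB, two-hour movie averages about 8,889 kbps (under the cap),
# while a hypothetical 25 GB, two-hour Blu-ray rip averages about 27,778 kbps (over it).
```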

I was able to get around the hard-coded limitation (the technical how-I-did-it is also available), and you can do it too:

Requirements

Before you begin, you need a couple of different things:

- Chrome web browser

- A Plex server

- A Chromecast

- A Chromecast developer account

- $5.00 USD (payable to Google for your Chromecast developer account)

- Static file hosting, such as GitHub Gists or Amazon S3[1]

Note, this hack only works in Chrome. It does not allow you to start casting high bitrate content from your iOS or Android devices.

Setting Up Your Chromecast

First, we will set up your Chromecast as a developer device. Because Chromecast applications (custom ones like Plex uses, at least) are vetted through a model similar to a mobile phone app store (i.e. they need to be approved before others can run them), we can't just point a Chromecast to your new application. Also, I cannot personally host the modified application because of copyright laws (the code is property of Plex), so you must do the modification yourself.

Before you can actually make the modification, though, you need to turn on developer mode on your Chromecast. You will need a Cast Developer Account, which is used to make your devices "developer" friendly. This has a one time fee of $5.00.

To register for a Cast Developer Account, visit the web console and sign in with your Google account:

https://cast.google.com/publish/

You will see something like the following.